Software Quality Assurance (SQA) is essential to any high-quality software development process. Buggy software can harm your reputation, sometimes irreparably. In this blog post you will learn about the principles of QA, the testing strategies that help ensure software quality, and the benefits quality assurance can offer a business.

What is Quality Assurance in software development?

Let’s start by explaining the main objectives of QA, which lie behind any successful product release. First of all, quality assurance is not only about testing and fixing bugs. It is the overall process of building quality management into software development. This makes it substantially different from quality control, which is a one-time action.

To deliver a project successfully, QA needs to be performed from the outset. The sooner the QA process begins, the better the final product will be. Fixing bugs discovered once software is already in production costs far more than fixing the same bugs early on.

What’s more, to do Quality Assurance properly, QA engineers have to work closely with both software developers and the business. Developers should share the product’s technical specifications, while the business should clearly explain what clients expect from the product and what its business goals are. Only when armed with this knowledge can QA engineers build a comprehensive QA process.

The Difference between Quality Assurance and Testing

While Quality Assurance focuses on the testing process and ensures that the program can function under a given set of conditions, testing focuses on test cases, their implementation and their assessment. In short, QA deals with prevention and testing with detection.

Moreover, testing is part of quality control. It comprises a variety of methods for detecting software problems. Its purpose is also to ensure that all reported problems are properly fixed without side effects.

QA also spans the whole product life cycle. It manages quality control and checks whether the requirements are met, whereas testing focuses on finding bugs and inspecting the system. The main differentiator is that QA is process-oriented, while testing is product-oriented. Process management in QA should be preventive; testing, on the other hand, aims to correct issues that already exist.

If you want in-depth information on this topic, read our "Quality Assurance and Testing" article.

Classification of software testing

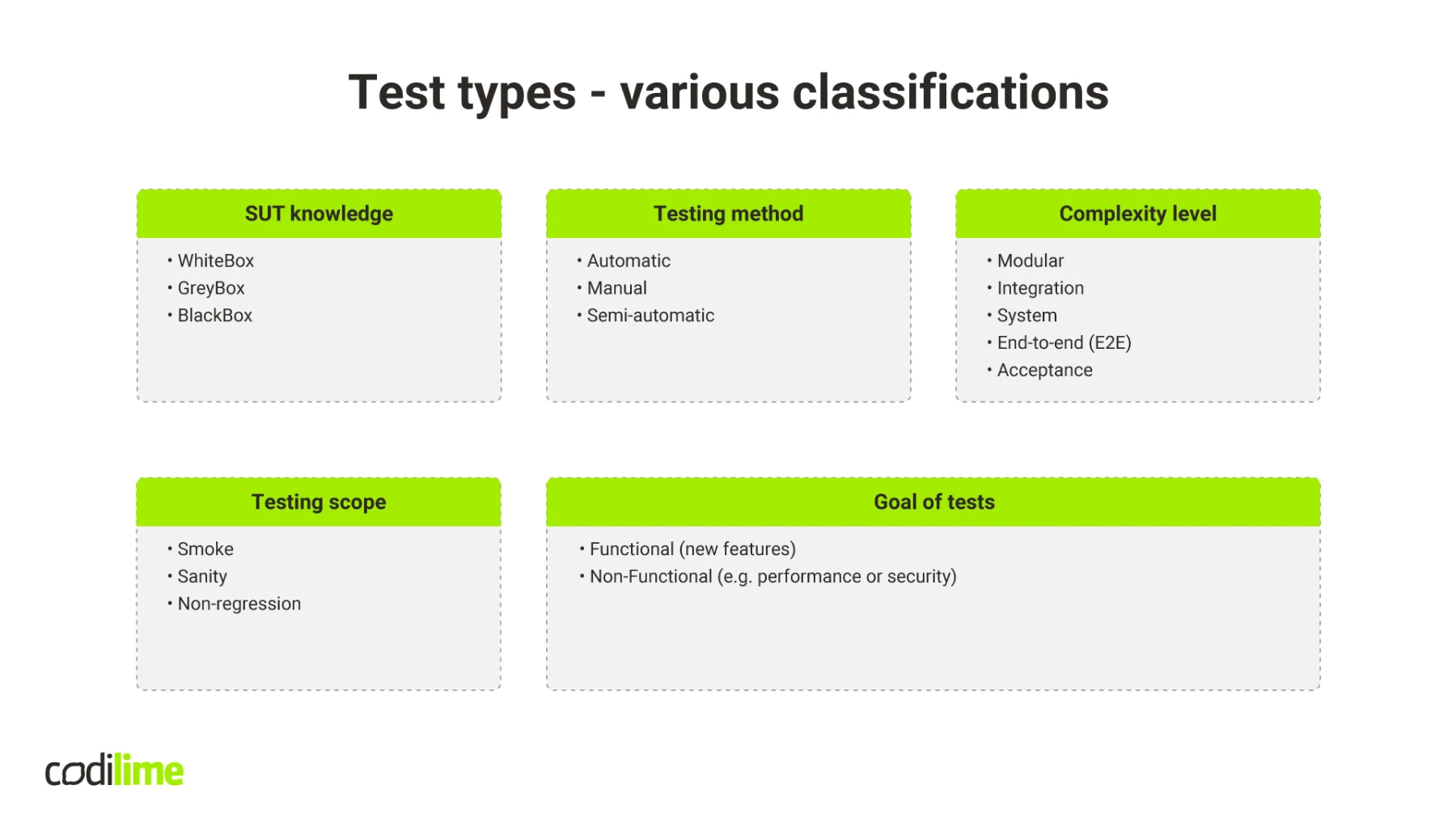

Now that we’ve laid out the main principles of a successful QA process, let’s take a look at the types of tests performed in software development. One way to group them is by how much the testers know about the System Under Test (SUT).

In white box testing, testers have full knowledge of the software they are testing: its components, its features and the relations between them. This is usually the case while the software is being developed.

In grey box testing, you have some knowledge of the solution architecture, but it is not entirely clear how it was coded. This may be the case with hardware such as a router: you know how it is built, what its features are and what software runs on it, but you did not write that software.

Black box testing, on the other hand, is when you receive the SUT to test without knowing how it was designed or how it works. In this case, tests are performed from the end user’s point of view.
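To make the difference between black box and white box testing more concrete, here is a minimal Python sketch. The `PriceService` class and its pricing rule are hypothetical. The black box check relies only on the specified, externally visible behaviour, while the white box check uses knowledge of the internal cache; a grey box check would sit in between, knowing that a cache exists without depending on how it is coded.

```python
# Hypothetical system under test: a price lookup with an internal cache.
class PriceService:
    def __init__(self):
        self._cache = {}
        self._backend_calls = 0

    def _fetch_from_backend(self, sku: str) -> int:
        # Stand-in for a slow backend call; the pricing rule is made up.
        self._backend_calls += 1
        return 10 * len(sku)

    def price(self, sku: str) -> int:
        if sku not in self._cache:
            self._cache[sku] = self._fetch_from_backend(sku)
        return self._cache[sku]


def black_box_check():
    # Only the specified, externally visible behaviour is checked.
    service = PriceService()
    assert service.price("ABC") == 30
    assert service.price("ABC") == 30  # repeated requests give the same answer


def white_box_check():
    # Uses knowledge of the code: the second request must be served from the cache.
    service = PriceService()
    service.price("ABC")
    service.price("ABC")
    assert service._backend_calls == 1
    assert "ABC" in service._cache


black_box_check()
white_box_check()
```

Note that the white box check would break if the cache were renamed, which is the price of relying on internal knowledge.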

There are three types of testing methods:

- manual testing,

- automatic testing,

- semi-automatic testing.

Manual testing, which was very popular until recently, requires a tester to work through every test case in a testing scenario, collect data and prepare a final report. It is hard and time-consuming work. In automatic testing, on the other hand, automated tools and scripts do the entire job: data are collected and final reports produced automatically. In the half-way scenario, the tests are performed automatically but the testing environment is set up manually. This is semi-automatic testing.
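As an illustration of automatic testing, the sketch below runs every test case, collects the results and builds the final report without manual steps. It is a minimal example under stated assumptions: the `add_numbers` function and the test cases are hypothetical stand-ins for a real SUT, and real projects would typically use a test framework rather than a hand-written runner.

```python
# Hypothetical function under test.
def add_numbers(a, b):
    return a + b


# Each test case: (name, inputs, expected output).
TEST_CASES = [
    ("adds positives", (2, 3), 5),
    ("adds negatives", (-2, -3), -5),
    ("adds zero", (7, 0), 7),
]


def run_all(cases):
    """Run every test case and collect the data automatically."""
    results = []
    for name, args, expected in cases:
        actual = add_numbers(*args)
        results.append({"name": name, "passed": actual == expected,
                        "expected": expected, "actual": actual})
    return results


def report(results):
    """Produce the final report a manual tester would otherwise write."""
    passed = sum(r["passed"] for r in results)
    lines = [f"{'PASS' if r['passed'] else 'FAIL'}: {r['name']}" for r in results]
    lines.append(f"{passed}/{len(results)} test cases passed")
    return "\n".join(lines)


if __name__ == "__main__":
    print(report(run_all(TEST_CASES)))
```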

Software testing types — complexity and scope

Complexity of Testing

The complexity of testing depends on what is to be tested and at which stage of the software’s development. In module testing, a single module or feature is tested. Module tests are usually written by the developer coding that feature, with the express aim of determining whether it works correctly. Integration tests check whether a given component or module can communicate with other components and modules in the system. System testing verifies whether the entire system under development works as anticipated.
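The difference between module and integration tests can be sketched with Python's standard `unittest` module. The `apply_discount` function and the `Checkout` class are hypothetical: the module tests check the discount feature on its own, while the integration test checks that the two components work together.

```python
import unittest


# Hypothetical module under test: returns the price reduced by `percent`.
def apply_discount(price: float, percent: float) -> float:
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Hypothetical second module that communicates with apply_discount.
class Checkout:
    def __init__(self, discount_percent: float = 0):
        self.items = []
        self.discount_percent = discount_percent

    def add(self, price: float) -> None:
        self.items.append(price)

    def total(self) -> float:
        return apply_discount(sum(self.items), self.discount_percent)


class ModuleTest(unittest.TestCase):
    """Module tests: exercise one feature in isolation."""

    def test_discount_is_applied(self):
        self.assertEqual(apply_discount(100.0, 20), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


class IntegrationTest(unittest.TestCase):
    """Integration test: checks that Checkout and apply_discount work together."""

    def test_checkout_total_includes_discount(self):
        cart = Checkout(discount_percent=10)
        cart.add(30.0)
        cart.add(20.0)
        self.assertEqual(cart.total(), 45.0)
```

Saved as a file, these tests can be run with `python -m unittest` followed by the module name.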

End-to-end (E2E) testing is done more from the end user’s perspective and seeks to determine whether the software meets their requirements. Acceptance tests, meanwhile, are different from all the rest. They are performed by the client, who has to accept the software product and verify whether it meets the requirements and business goals. It is good practice to define in the contract the scope of acceptance tests and how they should be performed.

Scope of Testing

The scope of testing is another differentiator. Smoke tests cast a wide but shallow net: they check the simplest scenarios to find out whether a product works at all and whether it makes sense to perform more thorough testing. Sanity tests are commonly used to check whether new features work as planned and fit in with other system components without breaking backwards compatibility.
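A smoke test can be as simple as touching each main feature once with the most basic input. In this sketch, `start_app`, `login` and `search` are hypothetical stand-ins for the product's entry points; an empty list of failures suggests that more thorough testing makes sense.

```python
# Hypothetical entry points of the product; in a real project these would
# be imported from the system under test.
def start_app() -> dict:
    return {"status": "running"}


def login(user: str, password: str) -> bool:
    return user == "demo" and password == "demo"


def search(query: str) -> list:
    return [item for item in ["apple", "banana"] if query in item]


def smoke_test() -> list:
    """Touch each main feature once with the simplest input.

    Returns the names of failed checks; an empty list means the product
    basically works and deeper testing makes sense.
    """
    checks = {
        "app starts": lambda: start_app()["status"] == "running",
        "login works": lambda: login("demo", "demo"),
        "search returns results": lambda: len(search("an")) > 0,
    }
    failures = []
    for name, check in checks.items():
        try:
            if not check():
                failures.append(name)
        except Exception:
            # A crash counts as a failed smoke check.
            failures.append(name)
    return failures


if __name__ == "__main__":
    failed = smoke_test()
    print("Smoke test passed" if not failed else f"Stop here, failed: {failed}")
```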

Backwards compatibility itself, on the other hand, is the scope of non-regression testing. It verifies whether a new release of a product is compatible with previous versions, provided such compatibility was required to begin with. In other words, you need to be sure that upgrading to a new version will not cause problems in software that is already operational.
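A non-regression check for backwards compatibility might feed data written by the previous version into the new release. The sketch below assumes a hypothetical settings format in which version 2 renamed `timeout` to `timeout_s` and added `retries`; it is an illustration, not a prescribed approach.

```python
import json

# Hypothetical formats: version 1 wrote {"timeout": 30};
# version 2 renamed the key to "timeout_s" and added "retries".
V1_SETTINGS = '{"timeout": 30}'


def load_settings_v2(raw: str) -> dict:
    """Settings loader of the new release; must still accept version 1 files."""
    data = json.loads(raw)
    if "timeout" in data and "timeout_s" not in data:
        # Migrate the old key so existing installations keep working.
        data["timeout_s"] = data.pop("timeout")
    data.setdefault("retries", 3)  # hypothetical default for the new setting
    return data


def test_v1_settings_still_load():
    # Non-regression check: data written by the previous version
    # must keep the same meaning after the upgrade.
    settings = load_settings_v2(V1_SETTINGS)
    assert settings["timeout_s"] == 30
    assert settings["retries"] == 3


if __name__ == "__main__":
    test_v1_settings_still_load()
    print("Settings written by version 1 still load correctly")
```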

Finally, tests can be divided according to their function. Functional testing, as the name suggests, checks a given functionality, while non-functional testing looks at specific areas of the system, such as performance, security and scalability; stress testing is one example. All test types are shown in Figure 1.

Fig. 1 Test types—classification

Test plan—the path to success

First steps

Before starting a testing process, you need to answer a few questions. First of all, what is the System Under Test (SUT)? Second, what is the scope of testing (in other words, what is to be tested)? A test plan for security will be different from one for performance. It is also important to know the architecture of the solution. If you know it, how detailed is your knowledge? Where can you find out more? The more you know about the architecture, the better you can design tests that check exactly what is required.

Moreover, before testing starts, you should know what the expected outcome is. In other words, you need a clear definition of done, i.e. criteria for deciding when testing has been finished. Without them you could end up in an infinite testing loop.

Adjusting the Testing Method

The next question is how detailed the tests should be. In some cases Software Quality Assurance requires thorough testing, while in others only the most important features need to be checked. The testing method should also be defined. Some clients may focus on automated testing, but that approach is not always feasible or profitable, and manual testing may be needed. In such cases it is necessary to outline what can be done automatically and what must be done by human testers.

The ultimate goal here is to perform all the tests the client requires. Ideally, the testing method should be adjusted to the SUT. The client also often has limitations, such as a lack of time and/or resources or constraints arising from the project specifications. These should also be included in the test plan.

Research the Previous Tests

Finally, it is good to know whether the same or similar tests have been performed in the past. If so, how did they turn out? Are the data and results available? Do we have previous test plans? Nobody likes reinventing the wheel or doing the same thing twice. If previous test results exist, is it possible to check what went wrong and why, and to amend the current test plan accordingly?

An ideal Software Quality Assurance plan

The ideal Software Quality Assurance plan should contain the following elements:

- A clearly defined goal

- The expected result

- A definition of project success and failure

- Input data

- A description of the testing environment

- A list of required resources and the relationships between them

- Initial settings of the testing environment with defined parameters and variables

- Steps needed to perform tests

- Time of testing (if applicable)

It is good practice to create a test plan that is easy to follow, so that everyone reading it can perform the tests and understand the results that are expected.
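The plan elements listed above could be captured in a simple data structure, which makes it easy to check that nothing is missing. This is a hypothetical sketch: `SQAPlan`, its field names and the example values are illustrative, not a standard test plan format.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class SQAPlan:
    """Hypothetical container for the plan elements; field names are illustrative."""
    goal: str
    expected_result: str
    success_criteria: str
    failure_criteria: str
    input_data: dict
    environment: str
    # Each required resource mapped to the resources it depends on.
    resources: dict
    # Initial environment settings: parameters and variables.
    initial_settings: dict
    steps: list = field(default_factory=list)
    # Time of testing, only if applicable.
    time_limit_minutes: Optional[int] = None

    def missing_elements(self) -> list:
        """Return the names of required elements that are still empty."""
        required = ["goal", "expected_result", "success_criteria",
                    "failure_criteria", "input_data", "environment",
                    "resources", "initial_settings", "steps"]
        return [name for name in required if not getattr(self, name)]


plan = SQAPlan(
    goal="Check that login works for registered users",
    expected_result="Registered users reach the dashboard",
    success_criteria="All login test cases pass",
    failure_criteria="Any registered user cannot log in",
    input_data={"users": ["alice", "bob"]},
    environment="Staging server with a copy of the user database",
    resources={"web app": ["database"], "database": []},
    initial_settings={"DB_SEED": "users_fixture", "LOG_LEVEL": "debug"},
)
print(plan.missing_elements())  # ['steps']
```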

An ideal test

Every test plan consists of a series of tests. Each of them must meet certain technical requirements to be considered correct. The ideal test should:

- Reflect product or project requirements

- Take into account possible limitations (e.g. time, resources, project constraints etc.)

- Verify both the expected results and possible errors (e.g. errors in input data)

- Define its priority and its dependencies on other tests

- Define initial and final conditions (in the case of automated testing, also define automatic verification of the results)

An ideal test may also define the testing method (i.e. manual or automatic), though this is not strictly necessary, as this method should be adjusted to the feature/product to be tested.
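Several properties of an ideal test listed above (initial and final conditions, a check of the expected result, a check of an input error and automatic verification) can be seen in this `unittest` sketch. The `save_username` function is hypothetical.

```python
import os
import tempfile
import unittest


# Hypothetical feature under test: saving a username to a file.
def save_username(path: str, name: str) -> None:
    if not name.strip():
        raise ValueError("username must not be empty")
    with open(path, "w", encoding="utf-8") as f:
        f.write(name)


class SaveUsernameTest(unittest.TestCase):
    def setUp(self):
        # Initial condition: an empty, isolated working directory.
        self.tmpdir = tempfile.TemporaryDirectory()
        self.path = os.path.join(self.tmpdir.name, "user.txt")

    def tearDown(self):
        # Final condition: the environment is cleaned up for the next test.
        self.tmpdir.cleanup()

    def test_expected_result(self):
        save_username(self.path, "alice")
        with open(self.path, encoding="utf-8") as f:
            # Automatic verification of the result.
            self.assertEqual(f.read(), "alice")

    def test_invalid_input_is_rejected(self):
        # Possible error in the input data: a blank username.
        with self.assertRaises(ValueError):
            save_username(self.path, "   ")
        self.assertFalse(os.path.exists(self.path))
```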

Prioritization and dependencies between tests

Priorities in Testing

Usually, the SUT is composed of many features, components or modules. Some of them are core ones, while others are less important. It is essential to test the core features, because without them the product won’t work. Minor functionalities can be omitted if time or resources are lacking. What’s more, a single feature is often checked by several different tests.
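Prioritization under limited time could be sketched as follows: core tests are always selected, and minor ones are added only while the time budget allows. The `PlannedTest` type, the time estimates and the greedy selection rule are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class PlannedTest:
    name: str
    core: bool    # True if the product will not work without this feature
    minutes: int  # estimated execution time (hypothetical)


def select_tests(tests: list, budget_minutes: int) -> list:
    """Always keep core tests; add minor tests only while time remains."""
    # Core tests first, then shorter tests before longer ones.
    ordered = sorted(tests, key=lambda t: (not t.core, t.minutes))
    selected, used = [], 0
    for t in ordered:
        if t.core or used + t.minutes <= budget_minutes:
            selected.append(t.name)
            used += t.minutes
    return selected


tests = [
    PlannedTest("payment", core=True, minutes=30),
    PlannedTest("login", core=True, minutes=10),
    PlannedTest("theme switch", core=False, minutes=15),
    PlannedTest("tooltip text", core=False, minutes=5),
]
print(select_tests(tests, budget_minutes=50))  # ['login', 'payment', 'tooltip text']
```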

Dependencies in Testing

To give the testing plan better visibility, such tests can be grouped into so-called test suites. Running a test may not make sense if tests it depends on have already failed. In that case, use common sense: analyse these dependencies and remove such a test from the current test suite’s scope. This can also be done for automatic tests.
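Skipping tests whose prerequisites have failed can be automated with a small runner. In this sketch, the test names and the `depends_on` mapping are hypothetical, and tests are assumed to be listed so that every dependency appears before the tests that need it.

```python
def run_suite(tests: dict, depends_on: dict) -> dict:
    """Run tests in order; skip a test if any test it depends on did not pass.

    `tests` maps a test name to a zero-argument callable returning True/False.
    `depends_on` maps a test name to the names of tests it requires.
    """
    status = {}
    for name, test in tests.items():
        if any(status.get(dep) != "passed" for dep in depends_on.get(name, [])):
            status[name] = "skipped"  # running it would not make sense
            continue
        try:
            status[name] = "passed" if test() else "failed"
        except Exception:
            status[name] = "failed"
    return status


suite = {
    "database connects": lambda: False,
    "user can be created": lambda: True,
    "home page loads": lambda: True,
}
deps = {"user can be created": ["database connects"]}
print(run_suite(suite, deps))
# {'database connects': 'failed', 'user can be created': 'skipped', 'home page loads': 'passed'}
```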

Finally, if corrections are made in the SUT, they need to be verified by running an adequate set of tests. Together, these principles optimize testing time and can be applied at the advanced testing stage or in Test Driven Development.

Standards of software quality assurance

The ultimate goal of the testing process in software quality assurance is to verify everything that requires verification, with thoroughness being the key standard to meet. The client’s requirements, such as user stories, system specs, business and system goals, and expected results, should be the starting point, and all of them have to be tested.

The process should result in a final report on what has been tested, together with a list of all the bugs that have been detected and ironed out. Such a report is essential if product quality is to be monitored effectively. It is also important that the tests performed derive, directly or indirectly, from the product or project specifications. Some of these are laid down in the contract with the client, while others may come from common industry knowledge or simply common sense.

Software quality assurance—business benefits

Now that you know the basic Quality Assurance principles and testing types, it is time to ask another question of paramount importance: why does Software Quality Assurance pay off, and why is it worth investing in? If the SQA process is done right, that is, from the very beginning of the software development lifecycle, it can save you money you would otherwise spend correcting bugs detected in software that is already operational. As a rule, the later in the process a bug is detected, the more it costs to fix.

Moreover, a sound and properly designed SQA process allows for proper software quality management of a product. It helps you verify whether a newly developed feature or release is compatible with versions that are already operational. Nobody wants to see software stop working after an upgrade has been released. Such a faulty upgrade can crush a company’s reputation, as its clients’ operations may be blocked.

I believe that these two reasons are enough to convince you that an investment in software quality assurance is an investment in the future success of your product. If you are interested, read more about QA trends.