Cloud-native Network Functions (CNFs) look set to be the next big trend in network architecture, and they are a logical step forward in its evolution. Networks were initially based on physical hardware like routers, load balancers and firewalls. This physical equipment was then replaced by today’s standard: virtual machines (VMs) running Virtualized Network Functions (VNFs). Now, a lot of research is going into moving these functions into containers. In such a scenario, a container orchestration platform would be responsible for hosting CNFs. Yet CNFs are more than just the containerization of network functions. Their wider adoption requires substantial changes not only to what performs the network function (VMs or containers) but also to the overall development, deployment and maintenance lifecycle. Another crucial question is what to do with legacy systems, which sometimes play a business-critical role.

This blog post discusses how to incorporate CNFs into a VM-based environment. It describes a technical solution that uses Tungsten Fabric to connect a VNF and a CNF smoothly. Our approach was originally presented by Jarosław Łukow and Magdalena Zaremba during the Open Networking Summit held on 23-25 September in Antwerp, Belgium. It is also a part of CodiLime’s broader research agenda focusing on CNFs. In another blog post in this series, we presented a business case where some VNFs couldn’t be containerized and had to be used in a CNF environment.

The current VNF/CNF landscape

Virtual Network Functions, despite currently being the standard in network architecture, are not free of drawbacks. Physical equipment was often moved into virtual machines one-to-one, creating single-purpose appliances or black boxes which are hard to manage and maintain. Such a black box can only be used as it is, without altering anything or creating derivative works. In other words, you have moved to virtualized network functions that are more flexible than the hardware they replaced, but still offer little flexibility of their own. Above all, it is often impossible to scale up a legacy VNF, which renders the entire migration process pointless. With VNFs, you may also be stuck with a manual software release process if the app in the VM does not allow this process to be automated. Using VMs to perform network functions may also result in vendor lock-in, as you may become dependent on the virtualization platform provider. Changing a vendor is costly both in terms of money and labour. Finally, deploying and testing containers is usually faster than deploying and testing VMs.

From this perspective, CNFs might be a real remedy for the fundamental pitfalls of VNFs. Containerization of network architecture components makes it possible to run many kinds of services on the same cluster and to continuously on-board and decompose apps. The cloud-native approach to developing applications means that these apps should be easily deployable, testable, scalable and maintainable. Thanks to CNFs, these principles could be applied to NFV products, which would then outperform current VM-based deployments. It will also be easy to test such an app using canary deployment: roll out a new release only to some subset of servers, test it, and then, if everything works correctly, roll it out everywhere. These are the main reasons the market is pushing for the adoption of CNFs. In this context, it is important to mention the Cloud Native Network Functions (CNF) Testbed, a joint initiative of the Cloud Native Computing Foundation (CNCF) and LF Networking (LFN), which enables organizations to test network functions as VNFs and CNFs and compare their performance and resiliency.
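To make the canary idea more concrete, here is a minimal Python sketch of how a subset of servers could be picked for a new release. It is only an illustration under our own assumptions: the function names (`in_canary`, `plan_rollout`), the server names and the hash-based selection are all hypothetical and are not taken from any particular orchestration platform.

```python
import hashlib


def in_canary(server_id: str, percent: int) -> bool:
    """Deterministically decide whether a server belongs to the canary group.

    The server name is hashed into one of 100 buckets; servers whose bucket
    falls below `percent` receive the new release first.
    """
    digest = hashlib.sha256(server_id.encode()).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent


def plan_rollout(servers, percent):
    """Split servers into (canary, rest) for a staged rollout."""
    canary = [s for s in servers if in_canary(s, percent)]
    rest = [s for s in servers if not in_canary(s, percent)]
    return canary, rest


# Hypothetical fleet of 20 servers; roughly 20% get the new release first.
servers = [f"node-{i:02d}" for i in range(20)]
canary, rest = plan_rollout(servers, 20)
```

Because the selection is derived from the server name, repeating the plan yields the same canary group, so the test results always refer to the same servers. Once the canary group looks healthy, the release is rolled out to `rest`.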

Adopting CNFs - the main challenges

To enable a wider spread of containers in network architecture, some important challenges must be met. These challenges can be discussed from the perspective of the global telco industry and from the point of view of a single company. To start with the global challenges, the companies that invested heavily in OpenStack-based NFV platforms do not see the CNF path as one to follow. This is easy to understand: they want to maximize the return on the investment they made in their NFV infrastructure. The second challenge is a more fundamental one. Moving to CNFs means that the whole infrastructure and all applications need to be redeployed from scratch. This would be an enormous change involving considerable investment, high risk and a long time to market. Finally, virtual functions would have to be reimplemented to be cloud-native ready and to deliver sufficient performance.

Approaching the adoption of CNFs from the perspective of a single company poses four major challenges. First, the software must be re-architected, as not every VNF can be containerized. There are a number of reasons for that. For example, some VM-based network functions do not run on a separate kernel at all but are built as unikernels, while others are based on operating systems other than Linux. Second, there is the question of performance. When redesigning the architecture, you must be sure that CNFs will perform similarly to VNFs. Third, ensuring adequate security is easier in VMs, which are isolated and use their own OS, than in containers, which are simply processes on the host. To make containers secure, it is necessary to properly configure the orchestration platform, e.g. Kubernetes (to learn more about Kubernetes security, read our white paper). Finally, there is the open question of integrating CNFs with legacy systems. Not every VNF can be moved into a CNF: it may be too risky, too costly or in some cases outright impossible.

In our solution, we decided to integrate a CNF with existing systems to show that adopting CNFs does not necessarily mean that we stop using VNFs. Tungsten Fabric will be the link between the old and the new. During the ONF Connect 2019 conference, our colleagues Monika Antoniak and Piotr Skamruk presented a different approach to this problem (using Virtlet on Kubernetes to communicate with VNFs).

Tungsten Fabric as a link between VNFs and CNFs

The idea behind our solution is to provide a way to gradually incorporate CNFs into an already existing virtual network infrastructure. This would allow us to perform the evaluation, canary tests, interoperability tests and, of crucial importance, to gradually replace single services instead of replacing the entire network stack. Tungsten Fabric offers considerable advantages that can help make this scenario a reality. It can act as the SDN plugin for both OpenStack and Kubernetes (and also for other systems which are not relevant here). Most of its features are platform- and device-agnostic. TF can connect mixed VM-container stacks using a single controller and a single underlay network. So it is not important what lies beneath: whether it is Kubernetes, OpenStack or anything else. What Tungsten Fabric sees is only source and target API. Using TF also makes it possible to configure the same security policies both for CNF and VNF environments.

Seamless transition in practice

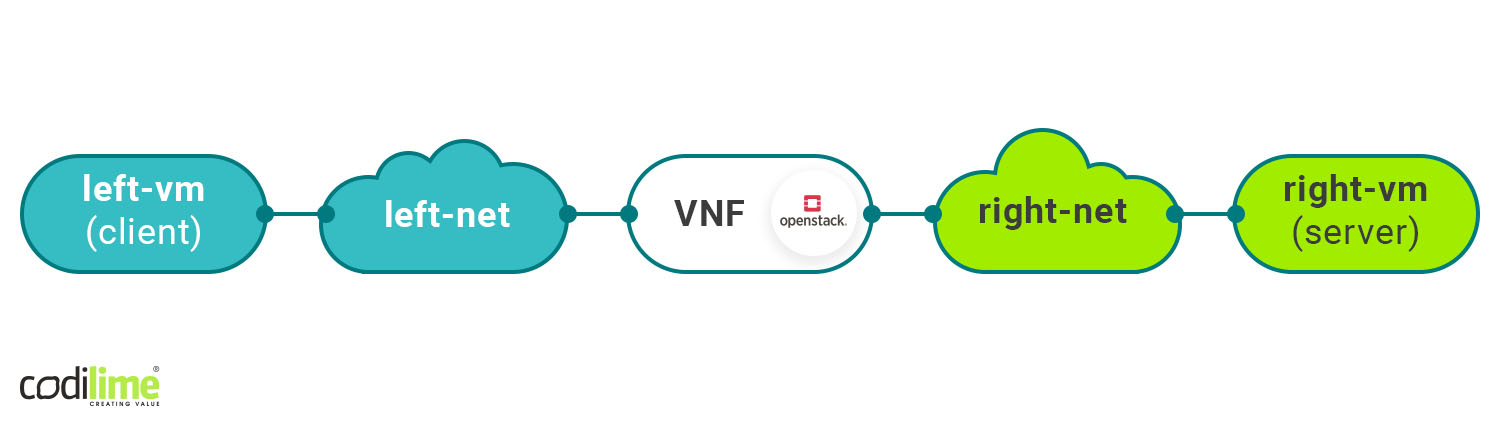

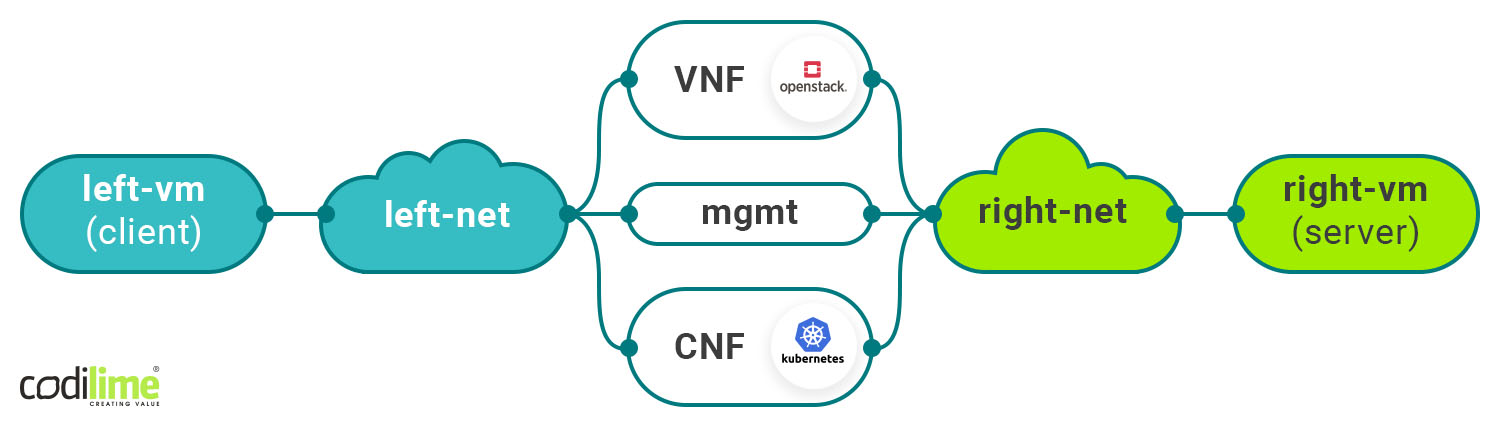

Let’s take a look at how this scenario, with OpenStack, Kubernetes and Tungsten Fabric as the SDN controller on top, works in practice. We’ll first create a Virtual Network Function that connects two virtual machines in OpenStack. Traffic between the left and right networks will pass through it (Diagram 1). This will be our starting point. We also have a CNF serving the same purpose as the VNF, and we want to incorporate it into our initial setup. Tungsten Fabric offers three approaches to do this.

Diagram 1. VNF on OpenStack

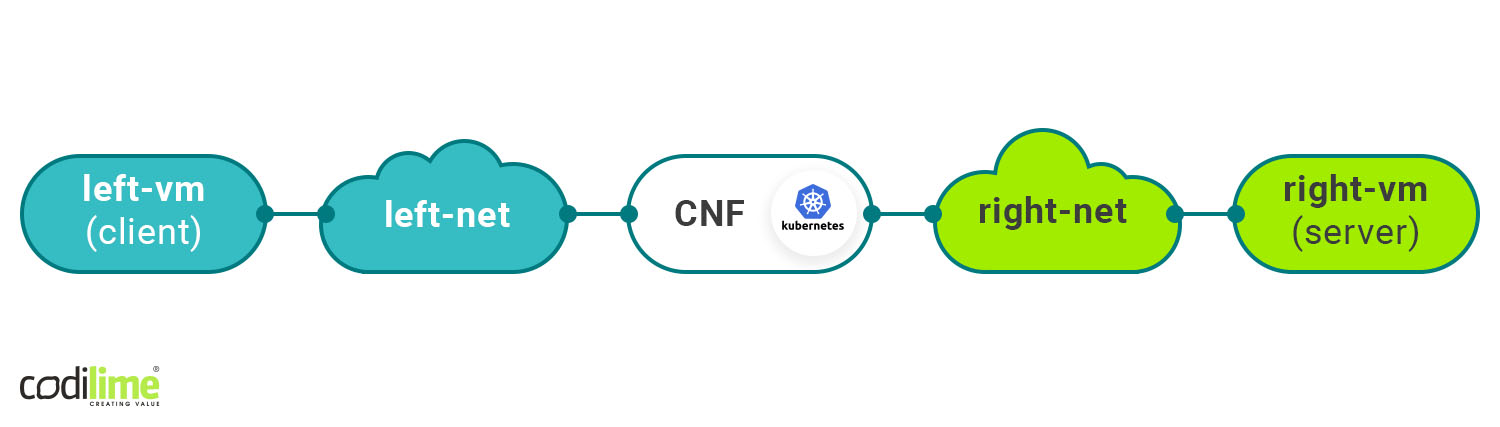

Firstly, we can exchange the VNF for a CNF by changing a port tuple in a service instance definition (in Tungsten Fabric) from one representing VM interfaces to a new one representing container interfaces (Diagram 2). Such an operation results in a temporary loss of traffic, as it is necessary to install new flows and routes pointing to the container interfaces. This scenario is also quite risky, as we cannot be sure that the CNF will work exactly the same as the VNF.

Diagram 2. CNF on Kubernetes

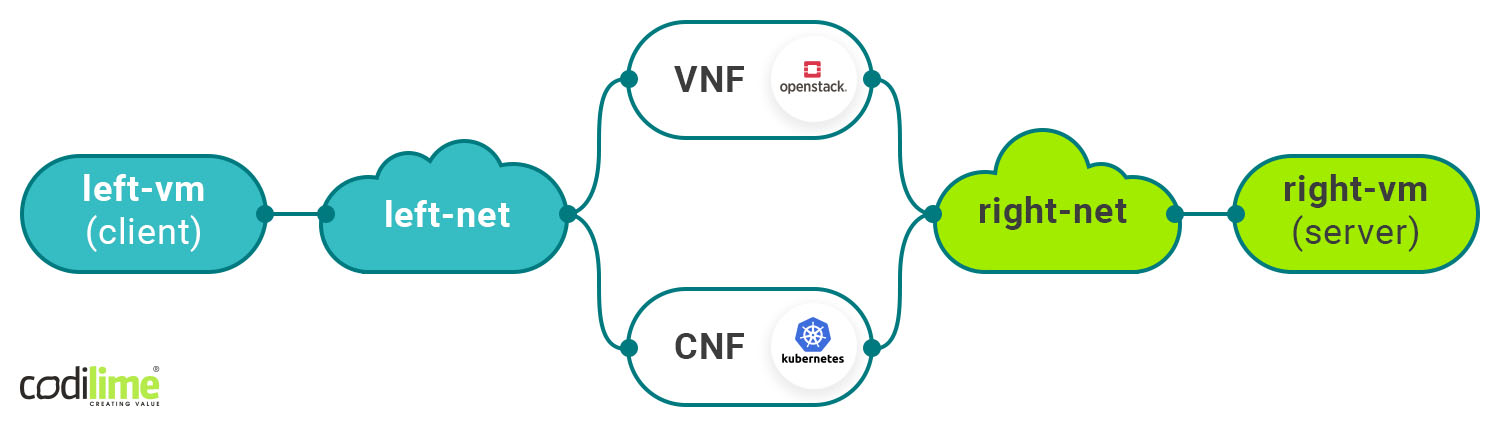
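The first approach, swapping one port tuple for another, can be pictured with a small Python model. Please note that this is not the Tungsten Fabric API: `PortTuple`, `ServiceInstance`, `replace_port_tuple` and all interface names are hypothetical and exist only to show the shape of the operation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass(frozen=True)
class PortTuple:
    """A pair of interfaces, one per network, that the service uses."""
    name: str
    left: str   # interface attached to the left network
    right: str  # interface attached to the right network


@dataclass
class ServiceInstance:
    """Simplified stand-in for a service instance definition."""
    name: str
    port_tuples: List[PortTuple] = field(default_factory=list)

    def replace_port_tuple(self, old_name: str, new: PortTuple) -> None:
        """Swap the named port tuple for a new one in a single step."""
        for i, pt in enumerate(self.port_tuples):
            if pt.name == old_name:
                self.port_tuples[i] = new
                return
        raise KeyError(old_name)


# Hypothetical interfaces: the VM ports first, the pod ports after the swap.
vnf_pt = PortTuple("vnf", left="vm-left-if", right="vm-right-if")
cnf_pt = PortTuple("cnf", left="pod-left-if", right="pod-right-if")

si = ServiceInstance("firewall", [vnf_pt])
si.replace_port_tuple("vnf", cnf_pt)
```

In this model the service instance never holds both port tuples at once, which mirrors why the hard swap causes a brief interruption: until the new flows and routes are in place, there is nothing to carry the traffic.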

In the second scenario (Diagram 3), we can add the CNF alongside the VNF. By default, Tungsten Fabric will distribute traffic between the container and the VM using equal-cost multipath (ECMP) routing. However, there might be a problem when those services are stateful.

Diagram 3. VNF and CNF in a multipath network configuration
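To see how traffic gets spread across the VM and the container in the multipath configuration, consider this generic sketch of hash-based ECMP path selection. It is not the algorithm Tungsten Fabric uses internally (this post does not describe it); the `pick_path` function, the path names and the addresses are our own assumptions for illustration.

```python
import hashlib


def pick_path(flow, paths):
    """Pick a next hop by hashing the flow's 5-tuple.

    Every packet of the same flow produces the same hash, so the whole
    flow is consistently sent to one service instance.
    """
    key = "|".join(str(part) for part in flow).encode()
    index = int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % len(paths)
    return paths[index]


# Hypothetical next hops: the VNF in a VM and the CNF in a pod.
paths = ["vnf-vm", "cnf-pod"]

# 5-tuple: source IP, destination IP, protocol, source port, destination port.
flow = ("10.0.1.5", "10.0.2.9", 6, 40000, 443)
chosen = pick_path(flow, paths)

# Different flows (here: different source ports) end up on both paths.
used = {pick_path(("10.0.1.5", "10.0.2.9", 6, port, 443), paths)
        for port in range(40000, 40200)}
```

Each instance sees only its share of the flows. For a stateless function that is fine, but a stateful one holds per-flow state that the other instance knows nothing about, which is the problem mentioned above.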

In the last scenario (Diagram 4), we have the same network topology as in the previous scenario, but now it is possible to synchronize the state between the container and VM through management interfaces. This solves the problem of stateful services. If the CNF’s performance meets our expectations, we can disable the VNF by simply removing the port tuple representing the VM interface from the service definition. The container will take over without any noticeable change in the network’s behavior.

Diagram 4. In-service CNF swap
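The order of operations in the in-service swap can be sketched as follows. Again, this is a simplified model and not Tungsten Fabric code: the `in_service_swap` function and the `state_synced` callback are hypothetical, and the port tuples are represented by plain strings.

```python
def in_service_swap(port_tuples, vnf, cnf, state_synced):
    """Return the port-tuple list after each completed step of the swap.

    Step 1 adds the CNF next to the VNF. Step 2 removes the VNF, but only
    if `state_synced()` reports that the CNF has caught up with its state.
    """
    steps = []
    current = list(port_tuples)

    # Step 1: the CNF joins the service alongside the VNF.
    if cnf not in current:
        current.append(cnf)
    steps.append(list(current))

    # Guard: keep both instances while the state is not yet synchronized.
    if not state_synced():
        return steps

    # Step 2: retire the VNF; the CNF keeps serving traffic.
    if vnf in current:
        current.remove(vnf)
    steps.append(list(current))
    return steps


done = in_service_swap(["vnf"], "vnf", "cnf", state_synced=lambda: True)
waiting = in_service_swap(["vnf"], "vnf", "cnf", state_synced=lambda: False)
```

The point of this ordering is that at least one working instance is attached to the service at every step, in contrast to the hard swap from the first scenario.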

The really big thing about this is that Tungsten Fabric does not differentiate between containers and virtual machines. We operate on interfaces which are attached to different devices. They can be virtual machines, containers or physical devices. This gives us the flexibility to configure the network to our needs. In this case, we remove the association from the virtual machine and add an association to the pod. The traffic thus stops flowing through the virtual machine and starts flowing through the container. This is the simplest demonstration of how CNFs and Kubernetes pods can be integrated into a service chain with Tungsten Fabric. Such a configuration gives us the ability to gradually incorporate CNFs into the existing network architecture without making drastic changes to the network itself.

The demo with a live presentation of these scenarios can be found on CodiLime’s website.

On the horizon

As you can see, Tungsten Fabric makes it technically possible to combine a VNF and a CNF in one network and route the traffic according to current needs. Since it is device-agnostic, it is not important whether the underlying layer is a container, a VM or a physical device. You are operating at the level of interfaces. Still, to move forward, it is crucial to create a unified management and orchestration (MANO) model and to ensure that CNF performance is comparable to that of VNFs. These are the measures that will be required if the adoption of CNFs is to widen.