Kubernetes has no dedicated network object that would let a pod use multiple networks, so a workaround is required. The Container Network Interface (CNI) is a good place to start. Read this blog post to learn how you can use it to get multiple networks for Kubernetes workloads. I also describe my proposal for changes in source code that will enable native handling of multiple networks in Kubernetes.

This blog post is based on the presentation which Doug Smith from Red Hat and I gave at the KubeCon+CloudNativeCon North America 2019 conference. The conference took place November 18-21 in San Diego, US.

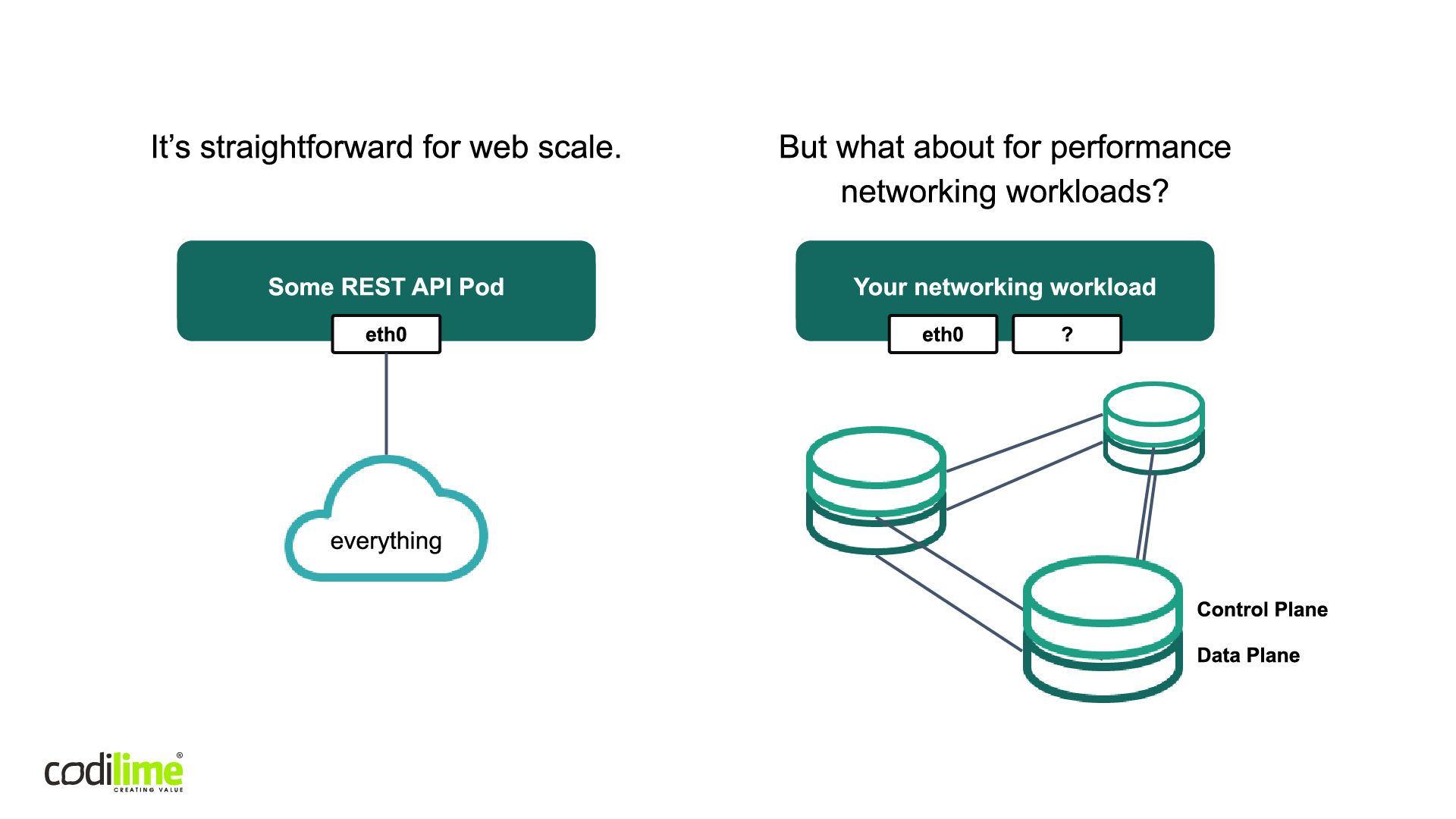

Just as containers and microservice architecture are becoming ubiquitous, so too is the major container orchestration platform, Kubernetes. Its use cases are becoming more and more complicated as users deploy it in different scenarios, networking among them. Unfortunately, in the simplest default configuration of Kubernetes, a pod is connected to only one network. Multiple networks are simply not supported. This is rarely a problem when deploying a single web-scale app (see Figure 1). But what is to be done when we need to manage performance-sensitive networking workloads?

Fig. 1 Networking for web scale and more complicated workloads

Unfortunately, Kubernetes itself does not provide us with a convenient solution, so a technique outside of the core Kubernetes source, one which will allow us to manage advanced workloads in Kubernetes, is required. So, it is time now to present the assumptions of the proposed workaround.

A workaround for more advanced Kubernetes workloads—assumptions

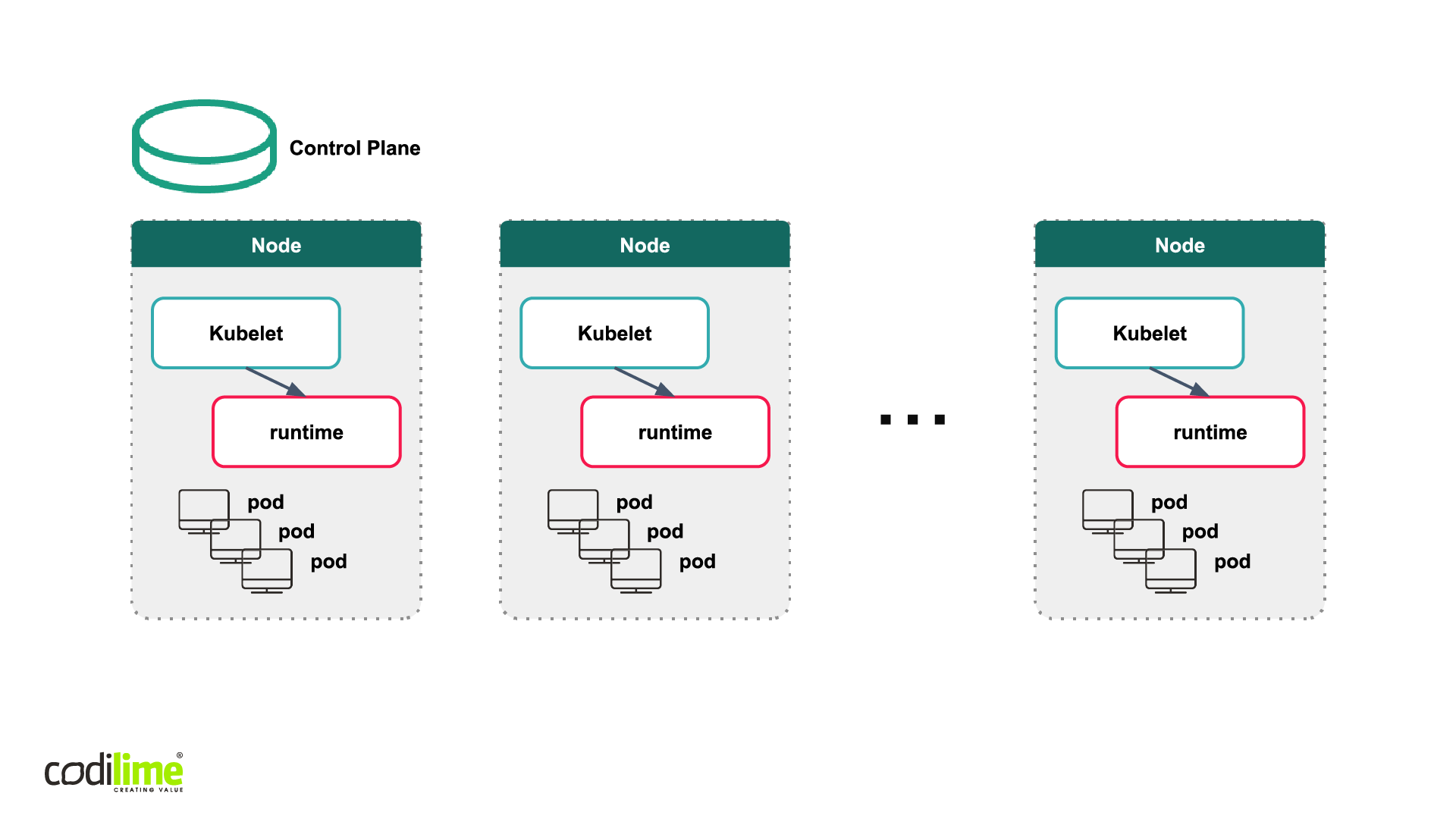

When designing our solution, I assumed the following (see Figure 2):

- Each pod has its own IP address

- Each pod on a node can communicate with other pods/services in a cluster without any NAT

- System services (kubelet, daemonsets) on a node can communicate with all pods on that node

- No particular solution is imposed: operators can decide which solution meets their own requirements

- Networking can be based on kubenet (which uses the CNI Bridge plugin) or on CNI

Fig. 2 Kubernetes networking

CNI—a short introduction

Before I delve into a more detailed description of our workaround, let me briefly describe what CNI really is. CNI (Container Network Interface) is a project developed under the umbrella of the Cloud Native Computing Foundation. It consists of a specification and libraries for writing plugins that configure network interfaces in Linux containers. Of note here is that CNI is container runtime-agnostic and does not require Kubernetes; it can be used with any container orchestration platform. Another great feature is that CNI is easy to implement: basically, it is as simple as having your app read from “standard in” (STDIN) and write to “standard out” (STDOUT). Last but not least, CNI considerably simplifies networking with Kubernetes, as Kubernetes itself does not need to know all the intricacies of your network. This knowledge is kept by plugins. In short, CNI allows you to extend the network properties of your containers by adding new plugins.

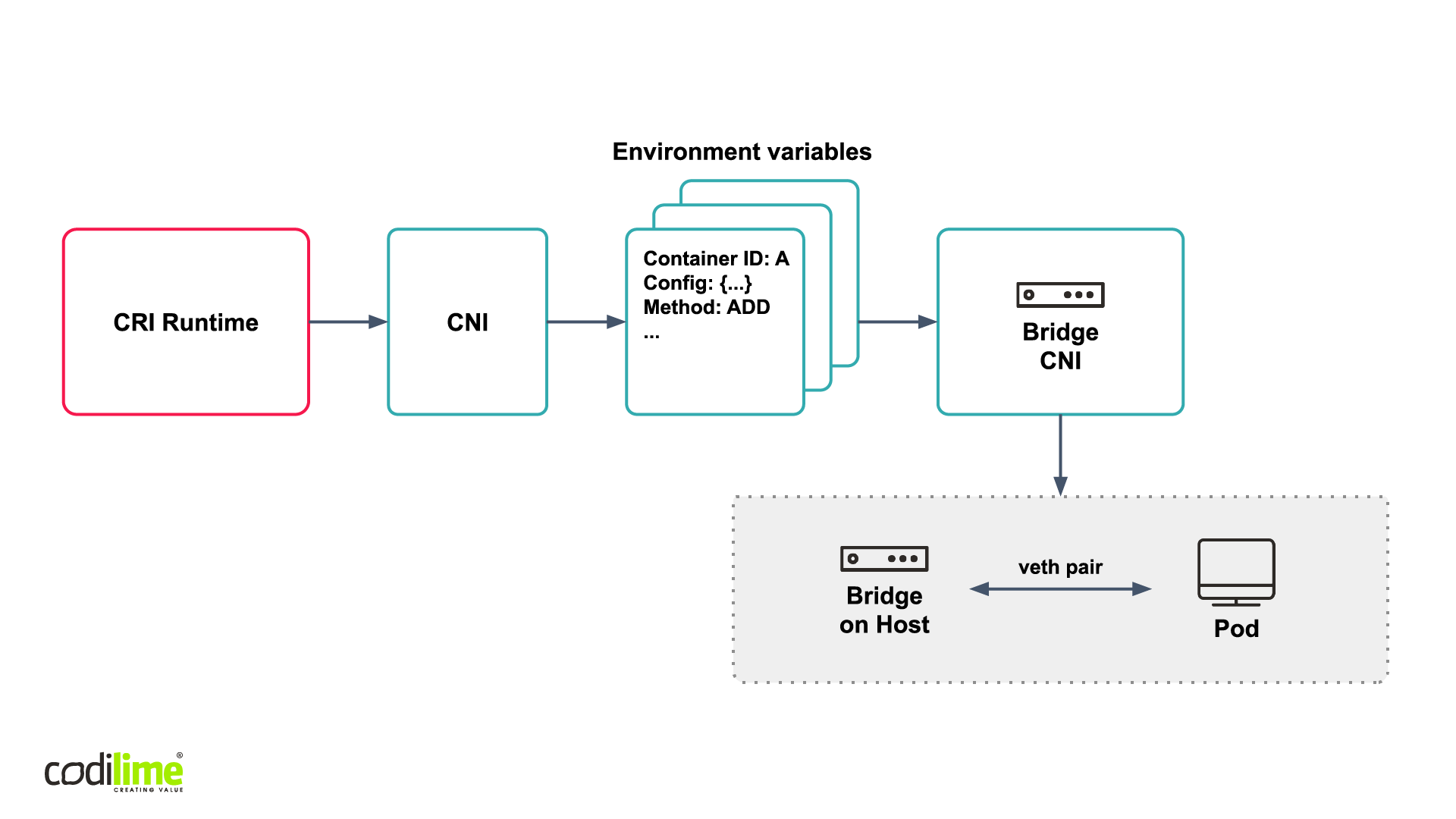

Now, let’s have a quick look at the CNI Bridge plugin. Essentially, it connects a pod to a bridge on the host with a veth pair. Kubernetes calls CNI via the CRI runtime. The runtime passes a number of environment variables and uses STDIN and STDOUT to interact with the Bridge CNI plugin. For example, it sends a container ID, so that the plugin knows which container to interact with. It also sends the entire CNI configuration for the given pod and the method to execute. In this particular case (see Figure 3), the method is ADD, which is called when a pod is created (other methods include DEL, which is called when a pod is deleted). Along with the container ID and the CNI configuration, ADD is sent to the Bridge CNI plugin to establish a connection between the pod and the bridge on the host.

Fig. 3 The Bridge CNI plugin
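The exchange described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the Bridge plugin's actual code: `handle` and `main` are hypothetical names, the ADD branch performs no real networking, and the returned result is a simplified placeholder. The environment variable names (`CNI_COMMAND`, `CNI_CONTAINERID`, `CNI_NETNS`, `CNI_IFNAME`) are the ones defined by the CNI specification.

```python
import json
import os
import sys


def handle(environ, netconf):
    """Return the result a plugin would report for one invocation."""
    command = environ["CNI_COMMAND"]
    if command == "ADD":
        # A real bridge plugin would create the veth pair and attach it to
        # the host bridge here; this sketch only echoes what it was told.
        return {
            "cniVersion": netconf["cniVersion"],
            "interfaces": [
                {"name": environ["CNI_IFNAME"], "sandbox": environ["CNI_NETNS"]}
            ],
        }
    if command == "DEL":
        # Nothing to report when the attachment is removed.
        return {}
    raise ValueError("unsupported CNI_COMMAND: " + command)


def main(environ, stdin, stdout):
    netconf = json.load(stdin)  # the network configuration arrives on STDIN
    # Diagnostics go to STDERR so that STDOUT carries only the result.
    print(environ["CNI_COMMAND"], "for container",
          environ.get("CNI_CONTAINERID", "?"), file=sys.stderr)
    json.dump(handle(environ, netconf), stdout)  # the result goes to STDOUT
    return 0


# Only act as a plugin when a runtime has actually set CNI_COMMAND.
if __name__ == "__main__" and "CNI_COMMAND" in os.environ:
    sys.exit(main(os.environ, sys.stdin, sys.stdout))
```

Keeping the dispatch in `handle` separate from the I/O in `main` is a design choice of this sketch: it lets the logic be exercised with a plain dictionary instead of real environment variables.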

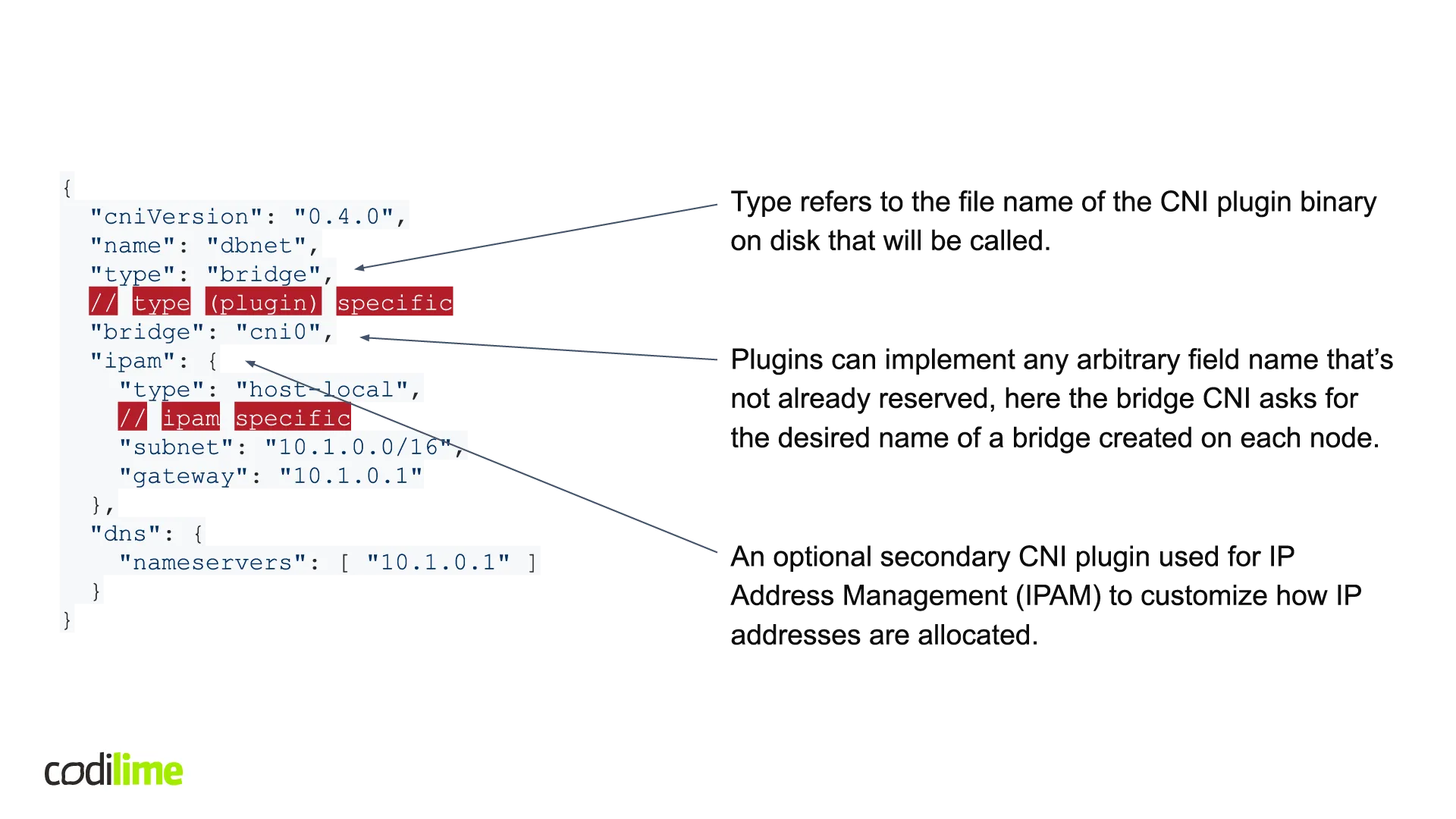

When describing the Bridge CNI plugin, I mentioned that a CNI configuration was passed. Let’s see what’s inside such a configuration (see Figure 4; for your convenience, below each figure that contains code I have also added an editable version of the code). CNI configurations are JSON files that can be defined pretty freely, as only a handful of fields are reserved by the CNI specification. Three things are worth noting. First, the “type” field refers to the file name of the on-disk CNI plugin binary that will be called. Second, plugins can implement any arbitrary field name that is not already reserved (the Bridge CNI plugin, for example, asks for the desired name of the bridge created on each node). Third, there is an “ipam” field for an optional secondary CNI plugin used for IP Address Management (IPAM), which customizes how IP addresses are allocated. Figure 4 annotates an example configuration with an explanation of each part.
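To illustrate how the “type” fields map to binaries on disk, here is a minimal Python sketch. The helper names `plugin_types` and `find_plugin` are hypothetical, and `/opt/cni/bin` is only a commonly used location assumed as a fallback; `CNI_PATH` is the search-path variable defined by the CNI specification. The configuration values are the ones used in Figure 4.

```python
import os


def plugin_types(netconf):
    """List the plugin binaries a configuration refers to: main first, then IPAM."""
    types = [netconf["type"]]
    ipam = netconf.get("ipam", {})
    if "type" in ipam:
        types.append(ipam["type"])
    return types


def find_plugin(plugin_type, search_dirs):
    """Return the first executable file named after the type, or None."""
    for directory in search_dirs:
        candidate = os.path.join(directory, plugin_type)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


netconf = {
    "cniVersion": "0.4.0",
    "name": "dbnet",
    "type": "bridge",
    "bridge": "cni0",  # plugin-specific field, not reserved by the specification
    "ipam": {"type": "host-local", "subnet": "10.1.0.0/16", "gateway": "10.1.0.1"},
}

# Assumption: fall back to /opt/cni/bin when CNI_PATH is not set.
search_dirs = os.environ.get("CNI_PATH", "/opt/cni/bin").split(os.pathsep)
for plugin_type in plugin_types(netconf):
    print(plugin_type, "->", find_plugin(plugin_type, search_dirs))
```

On a machine without CNI plugins installed, the lookup simply reports `None` for each type.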

Fig. 4 A dissection of CNI configurations

    {
      "cniVersion": "0.4.0",
      "name": "dbnet",
      "type": "bridge",
      // type (plugin) specific
      "bridge": "cni0",
      "ipam": {
        "type": "host-local",
        // ipam specific
        "subnet": "10.1.0.0/16",
        "gateway": "10.1.0.1"
      },
      "dns": {
        "nameservers": [ "10.1.0.1" ]
      }
    }

CNI multiplexer

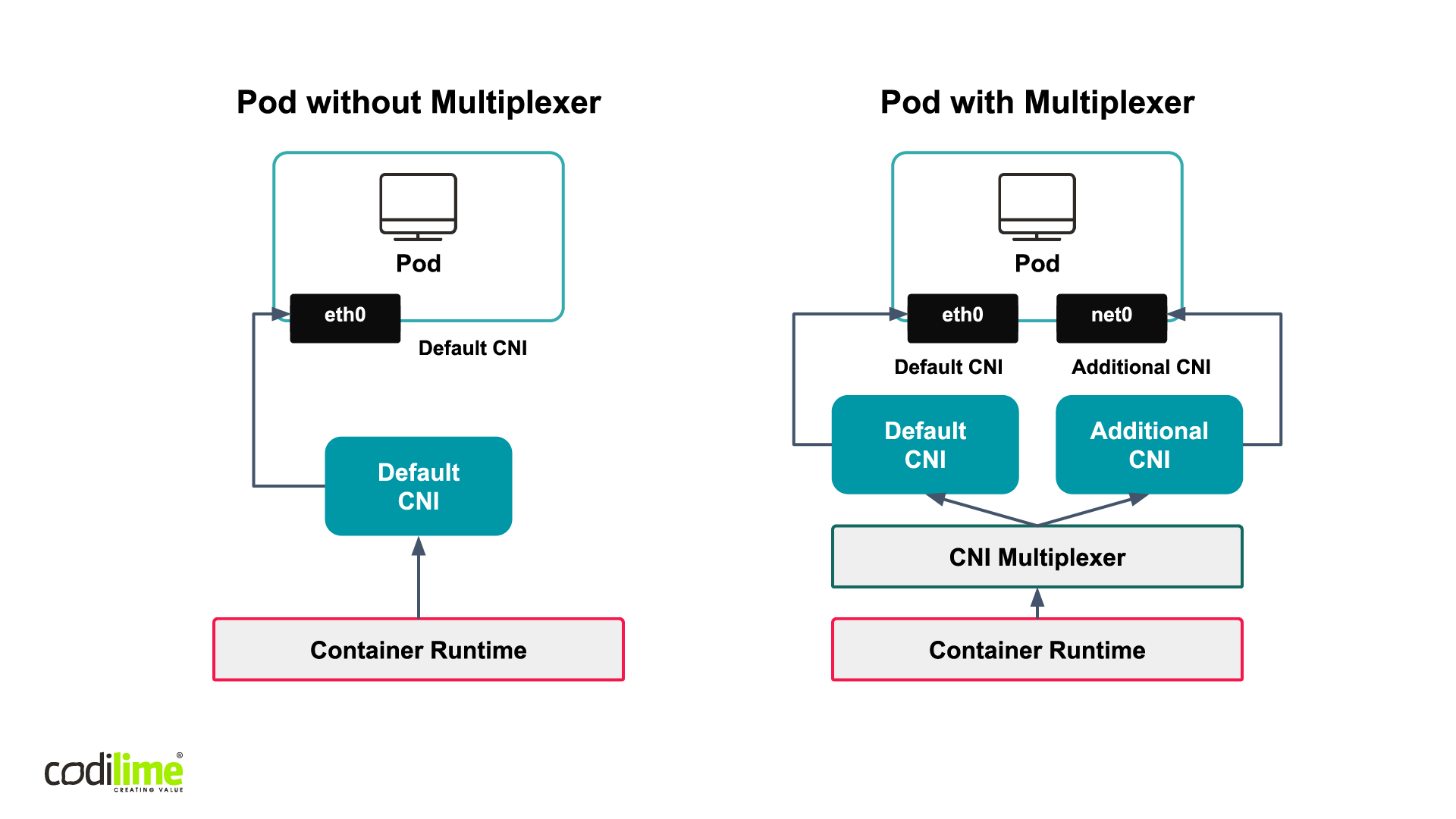

The scenario described above works well when there is one CNI plugin. A container orchestrator connects via CRI runtime with the default CNI plugin which establishes the default network connection with a pod. Of course, more connections may sometimes be called for, and when they are, you’ll also need a CNI multiplexer, which will work as a “meta plugin” and make it possible to call other CNI plugins (see Figure 5).

Fig. 5 CNI Multiplexers
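The idea of a meta plugin can be sketched as a loop over delegate configurations. This is a simplified illustration, not how any particular multiplexer is implemented: a real meta plugin executes the delegate binaries with environment variables and STDIN, whereas here they are replaced by Python callables so the example stays self-contained. The names `run_delegates` and `fake_plugin`, the `eth<N>` interface naming, and the network names are all assumptions.

```python
import json


def run_delegates(delegates, plugins, command, container_id):
    """Call the plugin of each delegate configuration in order; collect results."""
    results = []
    for index, netconf in enumerate(delegates):
        plugin = plugins[netconf["type"]]  # the "type" field selects the plugin
        ifname = "eth%d" % index           # hypothetical interface naming scheme
        results.append(plugin(command, container_id, ifname, netconf))
    return results


def fake_plugin(command, container_id, ifname, netconf):
    """Stand-in for executing a real CNI plugin binary."""
    return {"network": netconf["name"], "interface": ifname, "command": command}


delegates = [
    {"name": "default", "type": "bridge"},  # the default network comes first
    {"name": "dbnet", "type": "macvlan"},   # an additional attachment
]
plugins = {"bridge": fake_plugin, "macvlan": fake_plugin}

results = run_delegates(delegates, plugins, "ADD", "abc123")
print(json.dumps(results, indent=2))
```

From the container runtime's point of view there is still a single CNI plugin; the fan-out to several plugins happens inside it.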

NetworkAttachmentDefinitions for multiple networks in Kubernetes

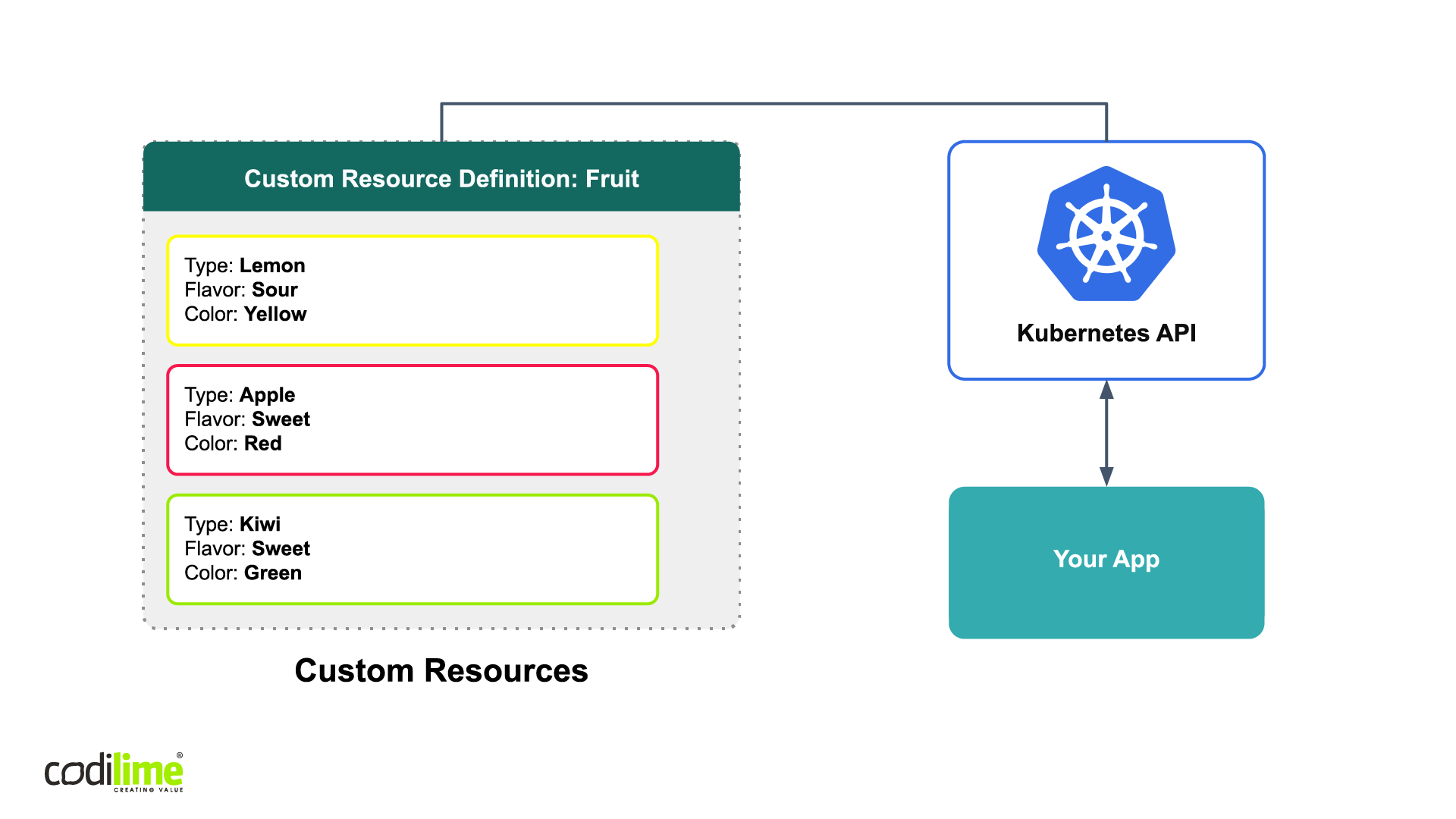

Here custom resources in Kubernetes become important as they extend the Kubernetes API. In other words, these resources use the Kubernetes API to store information specific to your application. A custom resource definition (CRD) file defines the properties of the object, as shown in Figure 6. The CRD file defines the resource called Fruit, which consists of various types of data (type, flavor, color). By having application data accessible via the Kubernetes API, we create a kind of lingua franca for applications to interact with one another within Kubernetes.

Fig. 6 Custom resources in Kubernetes

NetworkAttachmentDefinitions is a custom resource that defines a Spec of the requirements and procedures for attaching Kubernetes pods to one or more logical or physical networks. This includes requirements for plugins using the Container Network Interface (CNI) to attach pod networks. NetworkAttachmentDefinitions is the brainchild of the Network Plumbing Working Group, which came together at KubeCon 2017 in Austin, Texas, US. Having a standardized custom resource helps to normalize the user experience, which can help when users move between technologies, or if platforms change their technology to achieve multiple network attachments.

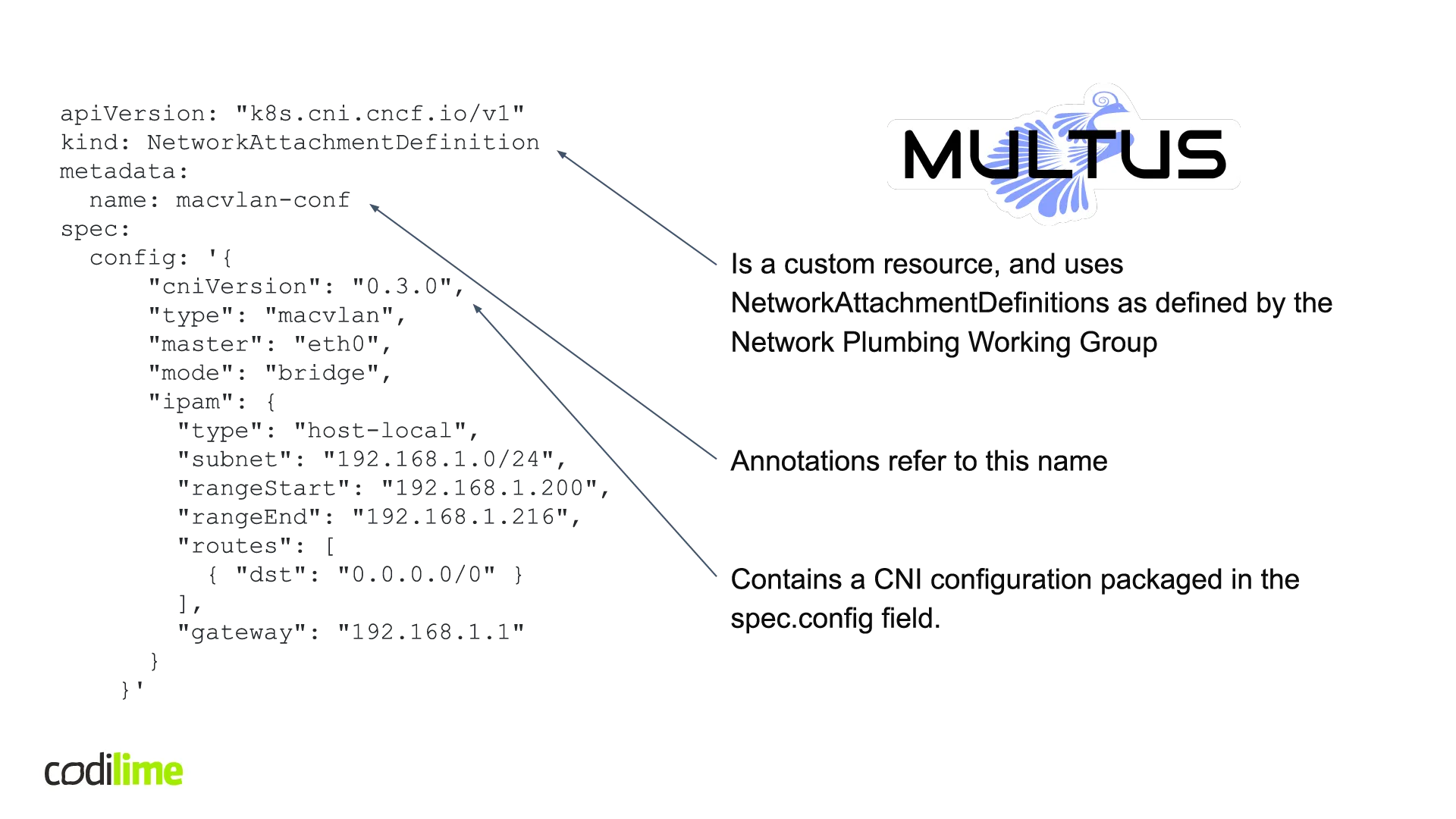

Multus CNI

Below, I’ll take a look at how NetworkAttachmentDefinitions work in practice by analysing a reference implementation. For this implementation, a meta plugin called Multus CNI was used. It uses NetworkAttachmentDefinitions as defined by the Network Plumbing Working Group. Below, you can see a YAML file with a NetworkAttachmentDefinition. The specific name (name: macvlan-conf) will be used by pods connecting to this network. Inside this NetworkAttachmentDefinition there is a CNI configuration embedded and packaged in the spec.config field. This is very important: when the meta plugin calls any other CNI plugin, there is already a CNI configuration in place that can be used by that plugin (see Figure 7).
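To make the embedding concrete, the following Python sketch decodes the CNI configuration held in spec.config. It represents the object as a plain dictionary with a shortened version of the Figure 7 configuration; the function name `embedded_cni_config` is hypothetical, and falling back to metadata.name when the configuration has no “name” is an assumption made for this sketch rather than a statement about any implementation.

```python
import json

net_attach_def = {
    "apiVersion": "k8s.cni.cncf.io/v1",
    "kind": "NetworkAttachmentDefinition",
    "metadata": {"name": "macvlan-conf"},
    "spec": {
        # The CNI configuration is stored as a JSON string, not as nested YAML.
        "config": '{"cniVersion": "0.3.0", "type": "macvlan", "master": "eth0", '
                  '"mode": "bridge", "ipam": {"type": "host-local", '
                  '"subnet": "192.168.1.0/24"}}'
    },
}


def embedded_cni_config(definition):
    """Decode the CNI configuration stored as a JSON string in spec.config."""
    netconf = json.loads(definition["spec"]["config"])
    # Assumption: use the object's name when the configuration omits "name".
    netconf.setdefault("name", definition["metadata"]["name"])
    return netconf


netconf = embedded_cni_config(net_attach_def)
print(netconf["name"], netconf["type"], netconf["ipam"]["subnet"])
```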

Fig. 7 Multus CNI as a reference implementation of NetworkAttachmentDefinitions

    apiVersion: "k8s.cni.cncf.io/v1"
    kind: NetworkAttachmentDefinition
    metadata:
      name: macvlan-conf
    spec:
      config: '{
          "cniVersion": "0.3.0",
          "type": "macvlan",
          "master": "eth0",
          "mode": "bridge",
          "ipam": {
            "type": "host-local",
            "subnet": "192.168.1.0/24",
            "rangeStart": "192.168.1.200",
            "rangeEnd": "192.168.1.216",
            "routes": [
              { "dst": "0.0.0.0/0" }
            ],
            "gateway": "192.168.1.1"
          }
        }'

CNI-Genie: a simple case

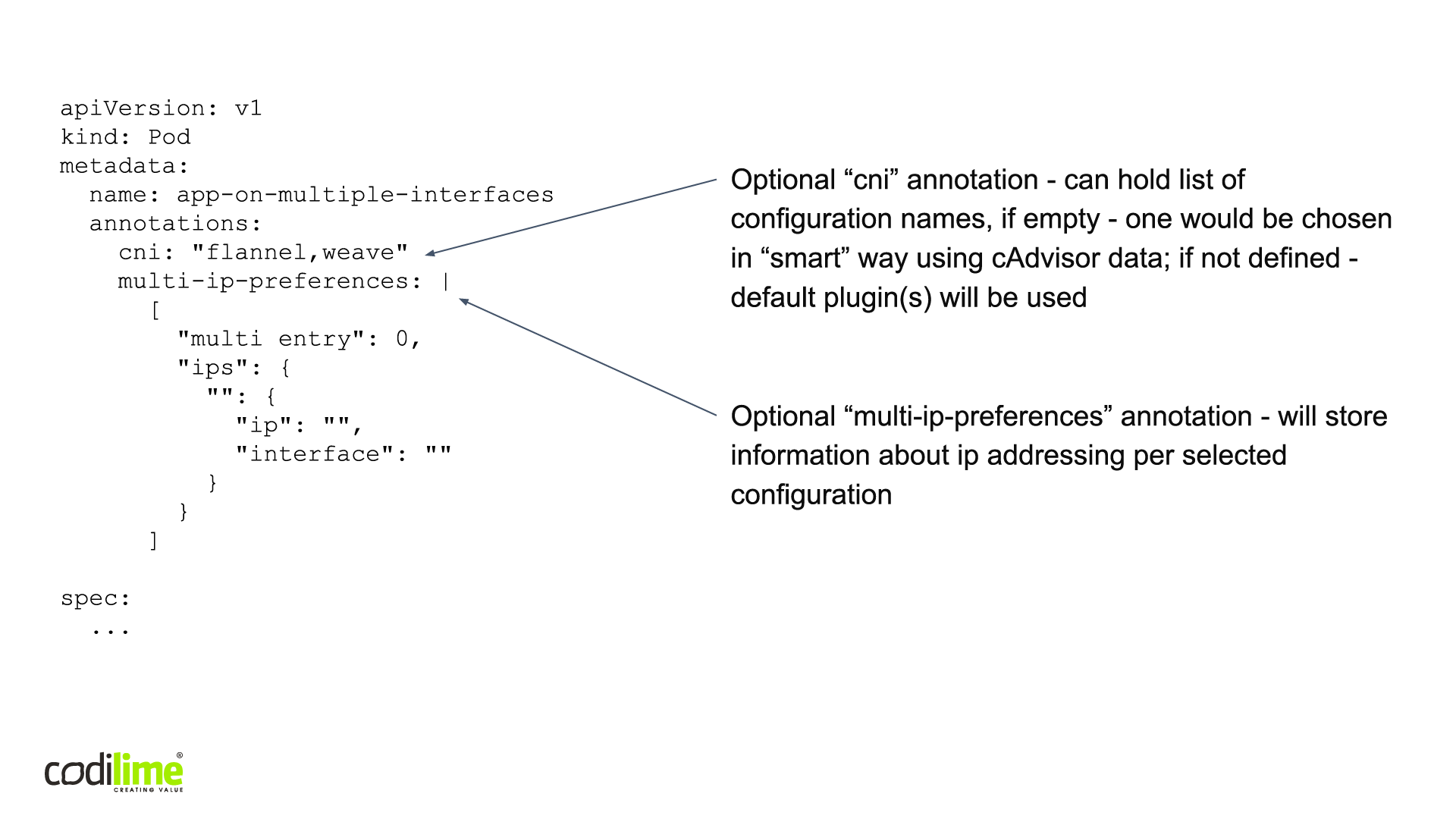

CNI-Genie is an example of a multiplexer. It has several working modes, one of which is based on a custom “cni” annotation: a comma-separated list containing the names of the CNI configurations to be called. If the annotation is present but empty, a plugin is chosen in a “smart” way using cAdvisor data. If it is not defined at all, the default plugin(s) are used. This allows you to select which CNI plugins should be called for a specific pod. Additionally, there is an optional “multi-ip-preferences” annotation that stores information about the IP addressing for each selected configuration. In this way the user is informed of which IP addresses are configured on which interfaces (see Figure 8).
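The three cases of the annotation-based mode can be summarised in a short Python sketch. It is not CNI-Genie's code: the function `select_plugins`, its parameters, and the `smart_choice` callback standing in for the cAdvisor-based selection are hypothetical, as is the plugin name returned by the callback in the usage lines.

```python
def select_plugins(annotations, default_plugins, smart_choice):
    """Decide which CNI configurations to call for a pod."""
    value = annotations.get("cni")
    if value is None:
        # Annotation not defined: use the default plugin(s).
        return list(default_plugins)
    names = [name.strip() for name in value.split(",") if name.strip()]
    if not names:
        # Annotation present but empty: let the "smart" selection pick one.
        return [smart_choice()]
    # Otherwise call exactly the listed configurations, in order.
    return names


def pick(): return "least-loaded-network"  # hypothetical smart choice

print(select_plugins({"cni": "flannel,weave"}, ["bridge"], pick))
print(select_plugins({"cni": ""}, ["bridge"], pick))
print(select_plugins({}, ["bridge"], pick))
```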

Fig. 8 CNI-Genie—a simple case

    apiVersion: v1
    kind: Pod
    metadata:
      name: app-on-multiple-interfaces
      annotations:
        cni: "flannel,weave"
        multi-ip-preferences: |
          {
            "multi_entry": 0,
            "ips": {
              "": {
                "ip": "",
                "interface": ""
              }
            }
          }
    spec:
      ...

CNI-Genie: Network attachments case

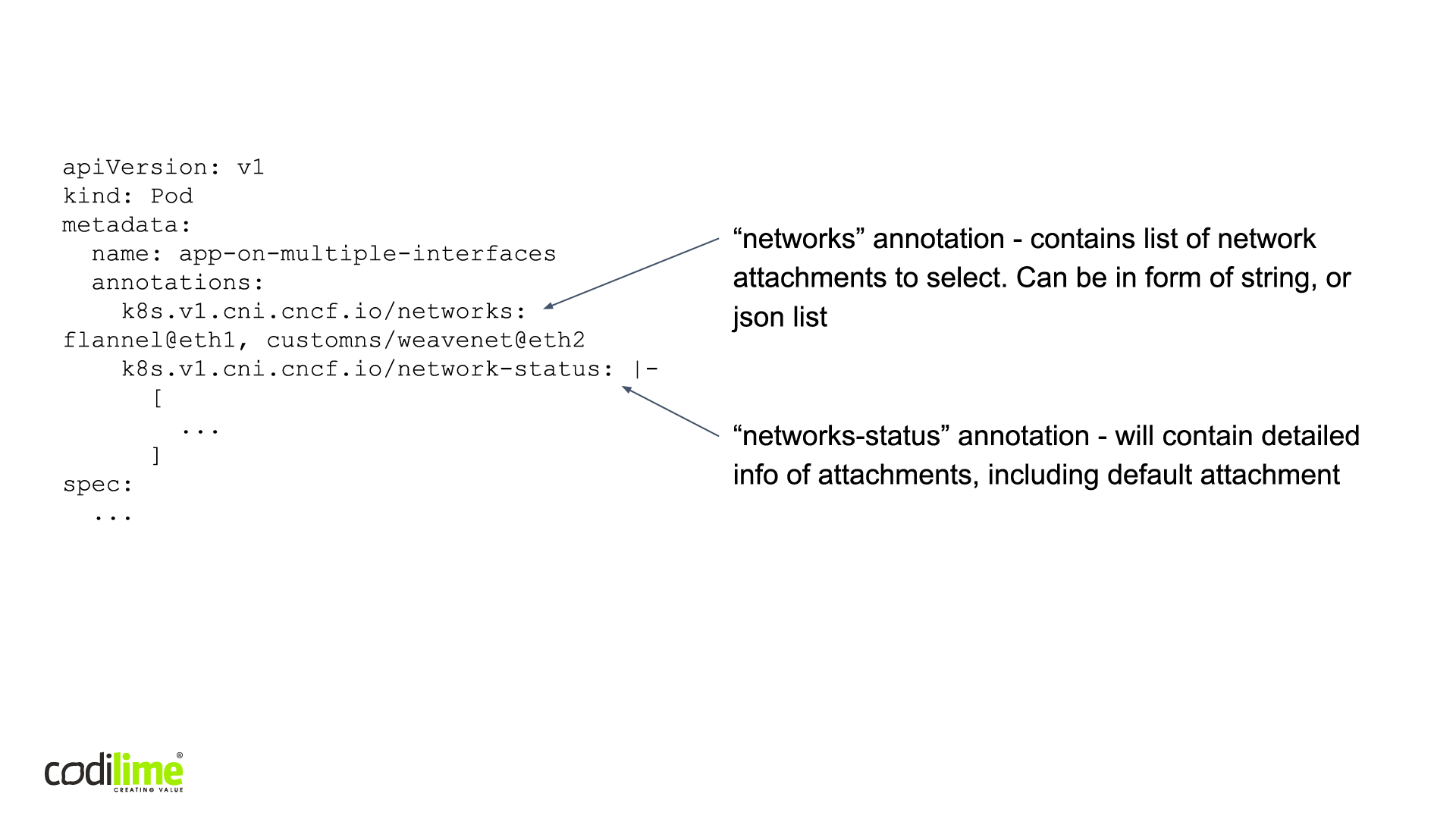

Another option is to use the NetworkAttachments specification. Two annotations are involved. The “networks” annotation contains a list of network attachments to select, given either as a comma-separated string or as a JSON list. The “networks-status” annotation contains detailed information on the resulting attachments, including the default attachment, with the status of each interface and its addresses held in specific fields. NetworkAttachments are also CRDs, so they can live in different namespaces. Additionally, all pods are attached to a default network that is not included on the list (see Figure 9).
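As an illustration of the two accepted forms of the “networks” annotation, here is a minimal Python parser. It is a sketch rather than the code of any implementation: `parse_networks` and its `pod_namespace` parameter are hypothetical names, and the sketch assumes that an entry without a namespace refers to the pod's own namespace. The sample string is the one used in this section's pod example.

```python
import json


def parse_networks(annotation, pod_namespace="default"):
    """Parse a networks annotation given as a string or as a JSON list."""
    annotation = annotation.strip()
    if annotation.startswith("["):
        # JSON form: a list of objects with name, namespace and interface keys.
        return [
            {
                "namespace": entry.get("namespace", pod_namespace),
                "name": entry["name"],
                "interface": entry.get("interface"),
            }
            for entry in json.loads(annotation)
        ]
    selections = []
    for item in annotation.split(","):
        item = item.strip()
        if not item:
            continue
        # String form: [<namespace>/]<name>[@<interface>]
        ref, _, interface = item.partition("@")
        namespace, slash, name = ref.rpartition("/")
        selections.append({
            "namespace": namespace if slash else pod_namespace,
            "name": name,
            "interface": interface or None,
        })
    return selections


for selection in parse_networks("flannel@eth1, customns/weavenet@eth2"):
    print(selection)
```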

Fig. 9 CNI-Genie—Network attachments case

    apiVersion: v1
    kind: Pod
    metadata:
      name: app-on-multiple-interfaces
      annotations:
        k8s.v1.cni.cncf.io/networks: flannel@eth1, customns/weavenet@eth2
        k8s.v1.cni.cncf.io/network-status: |-
          [
            ...
          ]
    spec:
      ...

Nokia DANM: yet another approach to multiple networks

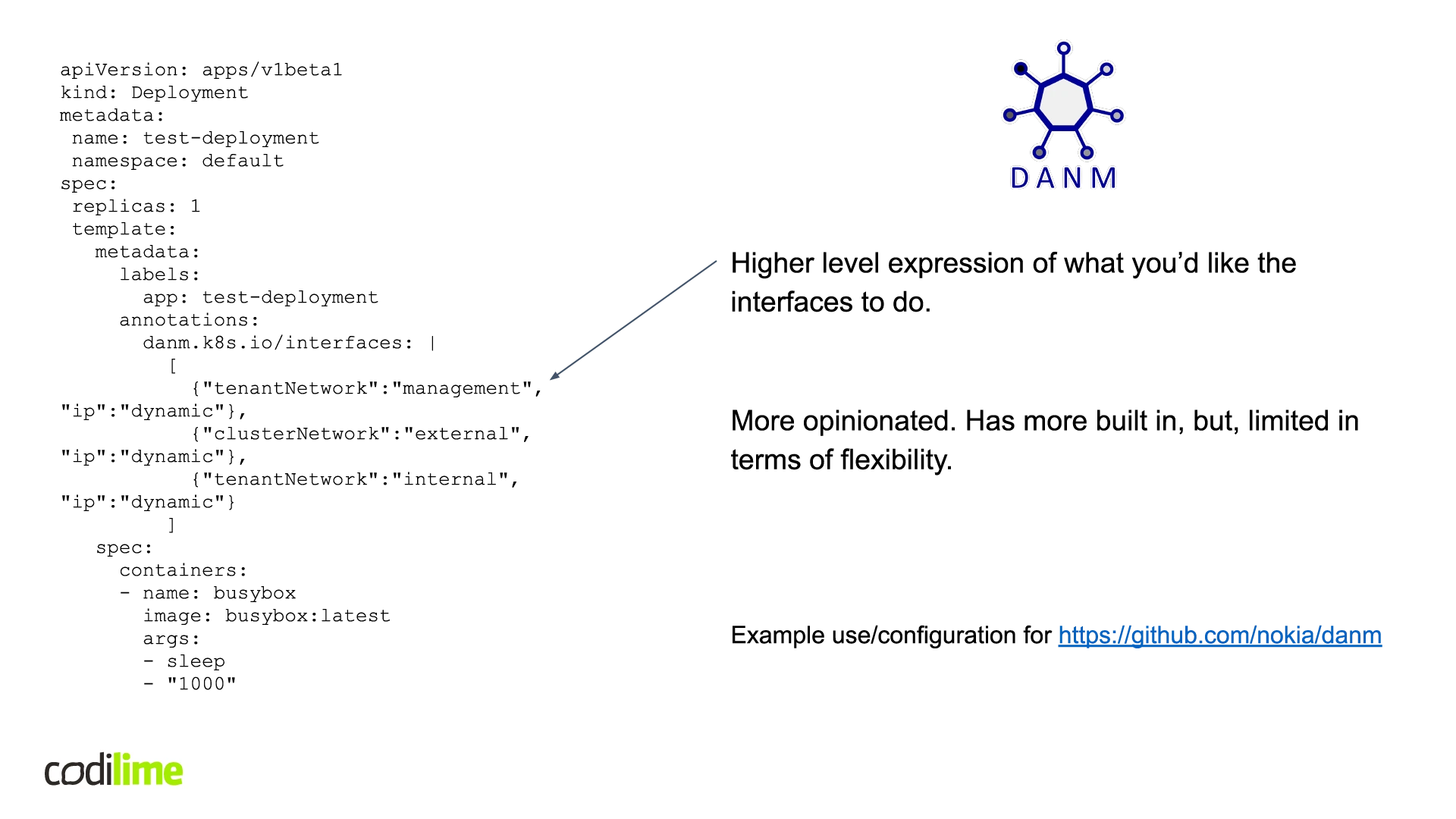

I’ll now have a look at an approach called DANM. A technology developed by Nokia, DANM is not a multiplexer, but it does allow you to attach multiple networks in its own specific way. It takes a more opinionated approach and allows you to have a higher-level expression of what you’d like the interfaces to do. It does not support all CNI plugins, but those that are supported can be used in a more detailed way. So, it is up to the user which option to choose. You can use the CNI plugins of your choice to have more granular control or use DANM to stick to a more opinionated approach. An example configuration is shown in Figure 10.

Fig. 10 Nokia DANM

    apiVersion: apps/v1beta1
    kind: Deployment
    metadata:
      name: test-deployment
      namespace: default
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            app: test-deployment
          annotations:
            danm.k8s.io/interfaces: |
              [
                {"tenantNetwork":"management", "ip":"dynamic"},
                {"clusterNetwork":"external", "ip":"dynamic"},
                {"tenantNetwork":"internal", "ip":"dynamic"}
              ]
        spec:
          containers:
          - name: busybox
            image: busybox:latest
            args:
            - sleep
            - "1000"

Other solutions for multiple networks

Still other solutions are available. Foremost among them is Tungsten Fabric, which enables you to connect different stacks like Kubernetes, Mesos/SMACK, OpenShift, OpenStack and VMware. It also makes for a smooth transition between Virtual Network Functions (VNFs) and Container Network Functions (CNFs). When multiple interfaces are used, Tungsten Fabric uses the standard of network attachments, but not as a meta plugin calling other normal plugins. It uses the CNI configuration in the same way as Multus or CNI-Genie do, but all the work under the hood is done by its own code.

Knitter is a CNI plugin that allows you to connect multiple networks and provide multiple interfaces to a pod. Unfortunately, it has some serious drawbacks. It speaks the CNI protocol and makes it possible to connect multiple interfaces to a single pod, but its CNI responses are faked: they are predefined and have the same content every time. So, it cannot be used with Virtlet and other runtimes that expect the data to be correct.

Finally, Tungsten Fabric, Knitter and Nokia DANM are not multiplexers, i.e. they don’t run other CNI plugins under the hood, but instead do the job using their own code.

Adding a native network object to Kubernetes source code

Last but not least, I have prepared a proposal for adding a native Kubernetes network object. This proposal is being discussed in the Network Plumbing Working Group (if you are interested, sign up to learn more). Using a CNI multiplexer is a workaround that does not change Kubernetes source code, but it may not suffice in more complicated cases. Of course, users will sometimes want to cover more complicated scenarios, e.g. use separate networks for different purposes like storing data, separating the control plane from the data plane, or providing a higher level of security or different tiers of network. To tackle this challenge I have prepared a proposal that will make it possible to natively support CNI multiplexers in k8s. It can be handled at the runtime level (by the name of the CNI config) and easily improved later: by exposing via CRI the networks available on particular nodes, by having the kubelet expose this information, e.g. in the node status as a list of strings, or by having the scheduler use this information when placing pods on nodes. Hopefully, this proposal will be included in one of the upcoming Kubernetes releases.

Summary

As you can see, there are several workarounds that allow you to use multiple networks without changing Kubernetes source code. In the long run, it would be reasonable to expect Kubernetes to support multiple networks natively. This is the rationale behind my proposal of a native Kubernetes network object.