Have you ever wondered how to drop a packet in Linux? Well, there are a few methods to do it. In this blog post we want to share them with you. These methods are not restricted to just firewall rules and can be divided into the following main categories:

- iptables - responsible for filtering packets handled by the TCP/IP stack

- ebtables - the same as above, but mostly focused on layer 2 (the comparison between ISO/OSI and TCP/IP models is presented in our infographic)

- nftables - successor of iptables+ebtables

- ip rule - a tool designed to build advanced routing policies

- IP routing - transferring packets according to the routing table

- BGP Flow Spec (how to deploy iptables’ rules using BGP protocol)

- QOS - using the tc filter command designed for QOS filtering

- eBPF with a little help from XDP

- filtration on OSI layer 7 using a user space application

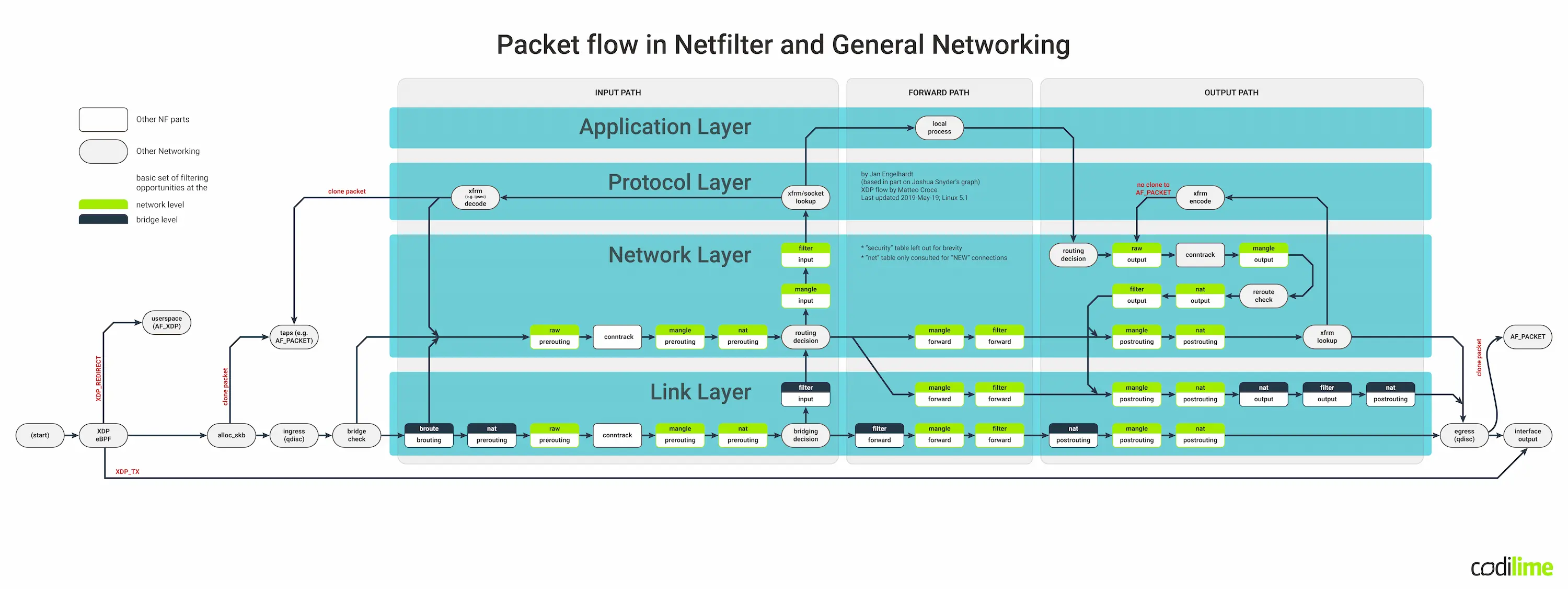

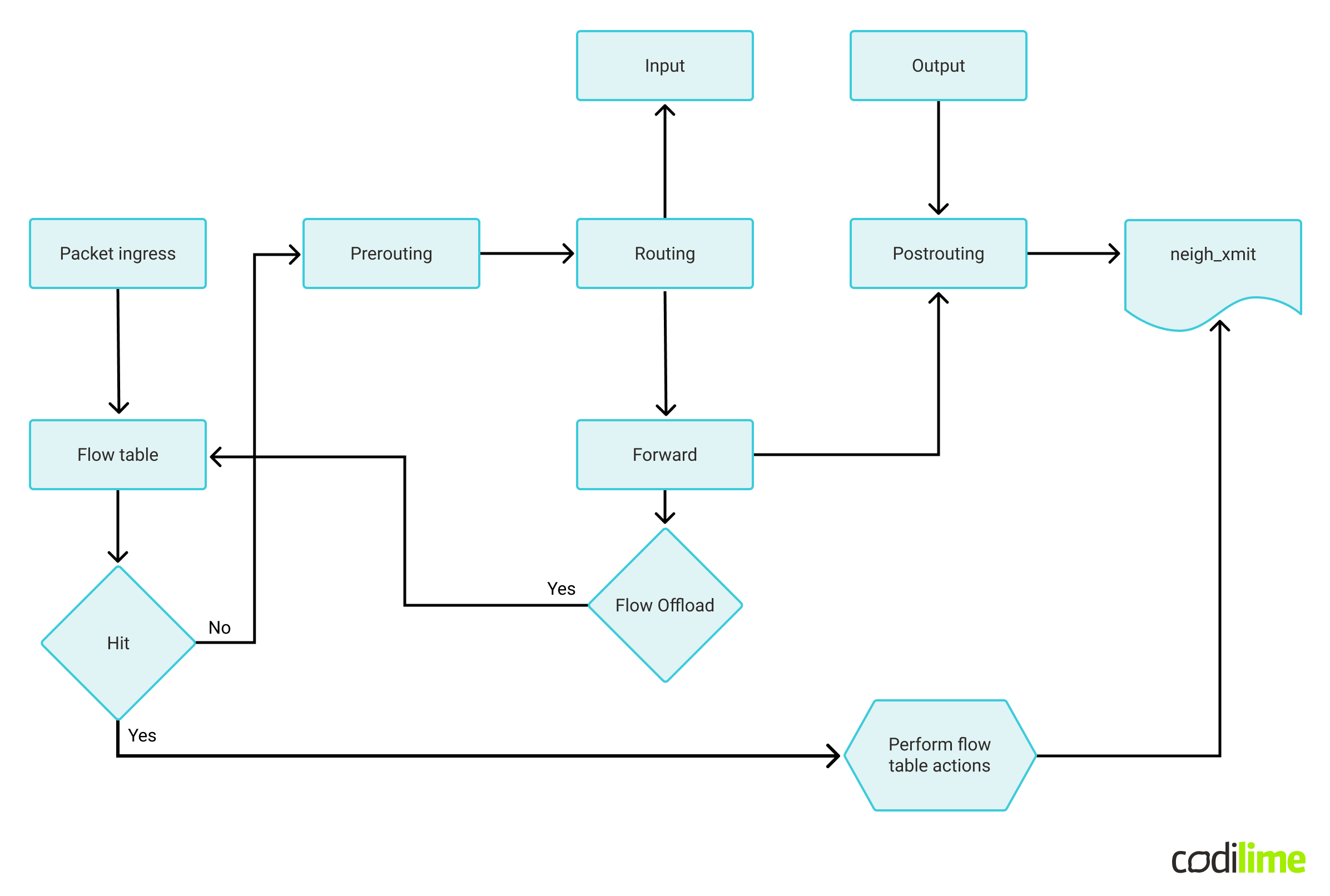

Before we begin: just a quick reminder about packet flow in the Linux kernel:

Fig. 1 Packet flow in the Linux kernel, source Wikipedia

If you are experiencing network issues in your Linux environment, remember that our guide on Linux network troubleshooting can help you diagnose and resolve the problems with your network configuration.

Packet dropping with iptables

iptables is the most popular method when it comes to processing packets in Linux. Filtering rules can be divided into 2 types which differ considerably: stateful and stateless. Using stateful filtration allows the packet to be analyzed in the context of the session status, e.g. whether the connection has already been established or not (the packet initiates a new connection). However, easy state-tracking has its price - performance. This feature is considerably slower than the stateless solution, but it allows more actions to be performed. The rule below is an example of dropping packets based on their state (here: a new connection):

# iptables -A INPUT -m state --state NEW -j DROP

By default, every packet in iptables is processed as stateful. To make an exception, it is necessary to perform a dedicated action in a special table “raw”:

# iptables -t raw -I PREROUTING -j NOTRACK

It is always good to remember that the iptables method allows you to perform classifications on multiple layers of the OSI model, starting from layer 3 (source and target IP address) and finishing on layer 7 (the l7-filter project, which is sadly no longer developed).

When handling stateful packets, it is also vital to remember that the conntrack module for iptables uses only a 5-tuple which consists of:

- source and target IP address

- source and target port (for TCP/UDP/SCTP and ICMP where other fields take over the role of the ports)

- protocol

This module does not analyze the input/output interface. So, if a packet that has already been processed enters the IP stack once again (in another VRF), a new state will not be created. There is, however, a solution for this issue: zones in the conntrack module, which allow packets from interface $X to be assigned to zone $Y.

# iptables -t raw -A PREROUTING -i $X -j CT --zone $Y

# iptables -t raw -A OUTPUT -o $X -j CT --zone $Y

To sum up: due to its many features the iptables drop method is slow. It is possible to speed it up by switching off the tracking of session states, but the performance increase (in terms of PPS) will be small. In terms of new connections per second, the gain will be bigger. More on this subject can be found here.

In the long run, the plan is to migrate iptables to the Berkeley Packet Filter (BPF), at which point a major performance increase can be expected.

ebtables

If we want to go lower than layer 3, we have to switch to ebtables, which allows us to work from layer 2 up to layer 4. For example, if we want to drop packets where the MAC address for IP 172.16.1.4 is different from 00:11:22:33:44:55, we can use the rule below:

# ebtables -A FORWARD -p IPv4 --ip-src 172.16.1.4 -s ! 00:11:22:33:44:55 -j DROP

It is important to remember that packets passing through a Linux bridge are also analyzed by the firewall rules. This is managed by the sysctl parameters below:

- net.bridge.bridge-nf-call-arptables

- net.bridge.bridge-nf-call-ip6tables

- net.bridge.bridge-nf-call-iptables

More info can be found in this article. If we want to gain performance it is advised to disable those calls.

nftables for dropping packets

The aim of nftables (introduced in kernel 3.13) is to replace certain parts of netfilter (ip(6)tables/arptables/ebtables), while keeping and reusing most of it. The expected advantages of nftables are:

- less code duplication

- better performance

- one tool to work on all layers

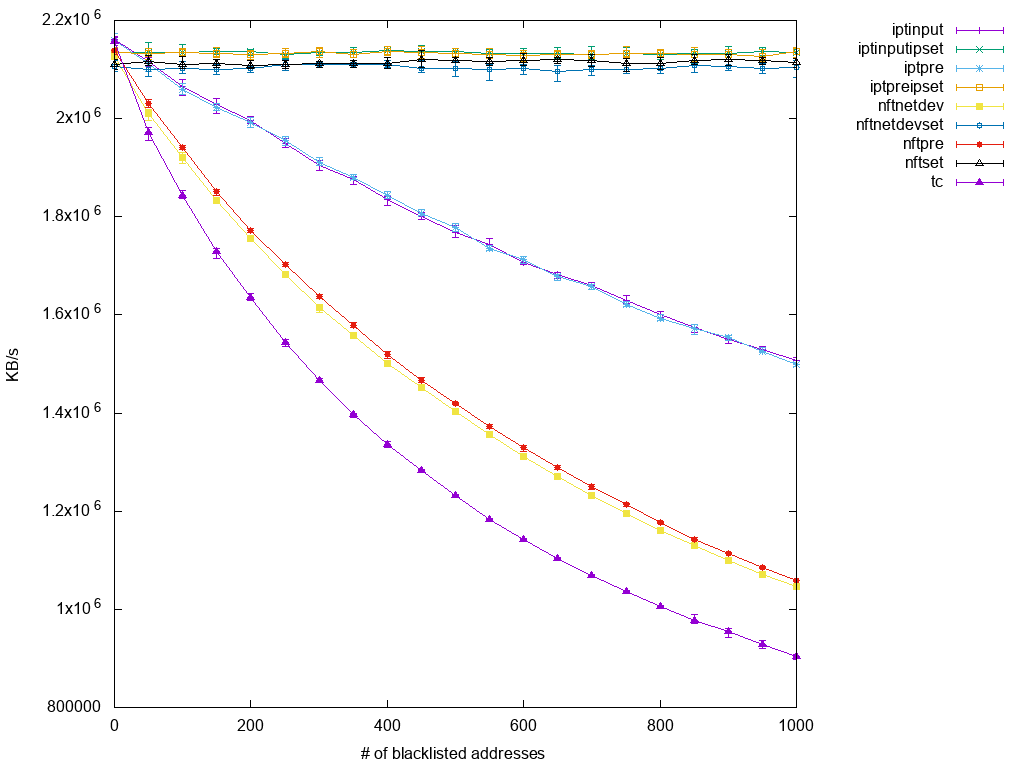

The performance test was done by the Red Hat guys. The following diagram shows the performance drop in correlation with the number of blocked IP addresses:

Fig. 2 Diagram of a performance drop in correlation with the number of blocked IP addresses

nftables is configured via the nft utility, which runs in user space. To drop a TCP packet, it is necessary to run the following commands (the first two are required, as nftables does not come with default tables/chains):

# nft add table ip filter

# nft add chain ip filter in-chain { type filter hook input priority 0\; }

# nft add rule ip filter in-chain tcp dport 1234 drop

Note: whenever both nftables and iptables are used on the same system, the following rules apply:

| nft | iptables | Result |
|---|---|---|
| Empty | Empty | Pass |
| Accept | Empty | Pass |
| Accept | Block | Unreachable |
| Block | Accept | Unreachable |
| Blank | Accept | Pass |
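The table above boils down to one rule: a packet gets through only if neither ruleset blocks it. A minimal sketch of that logic follows. The function name `combined_verdict` and the lowercase labels are illustrative assumptions, and "blank" is assumed to behave like "empty".

```shell
#!/bin/sh
# combined_verdict NFT IPTABLES  (hypothetical helper, not part of nft or iptables)
# Each argument is one of: empty, blank, accept, block (labels from the table).
# A packet passes only if neither ruleset blocks it.
combined_verdict() {
    if [ "$1" = "block" ] || [ "$2" = "block" ]; then
        echo "unreachable"
    else
        echo "pass"
    fi
}

# Walk through the five cases from the table.
for pair in "empty empty" "accept empty" "accept block" "block accept" "blank accept"; do
    set -- $pair
    printf '%-7s %-7s -> %s\n' "$1" "$2" "$(combined_verdict "$1" "$2")"
done
```

In other words, an accept in one framework never overrides a drop in the other.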

Sometimes nft can become slow, especially when dealing with a large number of rules, complicated chains, or when running on low performance hardware such as ARM devices. For such scenarios, one can accelerate performance by caching the actions assigned to specific flows (ACCEPT, DROP, NAT, etc.) and bypassing further checking of nft rules if a packet can be assigned to an already existing flow. The following diagram shows this idea in detail:

Fig. 3 Example performance acceleration of nft, source

Performance gain strongly depends on existing complexity but in the case of weak processors (such as ARM64) and complicated nft rules (such as in VyOS) one can expect a 2-3x throughput increase.

Enabling such features is relatively easy - one has only to add an extra rule:

table inet x {
    flowtable f {
        hook ingress priority 0; devices = { eth0, eth1 };
    }
    chain y {
        type filter hook forward priority 0; policy accept;
        ip protocol tcp flow offload @f
        counter packets 0 bytes 0
    }
}

Each packet hitting ingress will be checked against flow table “f”. Each TCP packet traversing chain “y” will be offloaded.

For more information one can check the kernel nft documentation at the following link.

ip rule

The ip rule tool is a lesser-known method used to create advanced routing policies. As soon as the packet passes through the firewall rules, it is up to the routing logic to decide whether the packet should be forwarded (and where), dropped, or handled in some other way. There are multiple possible actions; stateless NAT is one of them (not a commonly known fact). The one that interests us here, however, is “blackhole”:

# ip rule add blackhole iif eth0 from 10.0.0.0/25 dport 400-500

ip rule is a fast, stateless filter used frequently to reject DDoS traffic. Unfortunately, it has a drawback: it allows us to match only on source/destination IP addresses (layer 3), TCP/UDP ports (layer 4) and the input interface.

Keep in mind that the interface loopback (lo) plays an important role in the ip rulesets. Whenever it is used as an input interface parameter (iif lo), it decides whether the rule is applied to the transit traffic or to the outgoing traffic coming from the host on which this rule is being configured. For example, if we want to drop transit packets destined to the address 8.8.8.8, the following rule can be used:

# ip rule add prohibit iif not lo to 8.8.8.8/32

IP routing

Another method of filtration is to use routing policies. While it is true that this method works only at layer 3 and only on destination addresses, it can be used simultaneously on many machines thanks to routing protocols like BGP. The simplest example of dropping the traffic directed to the 8.8.8.8 address is the rule below:

# ip route add blackhole 8.8.8.8

When talking about routing policies, it is worth mentioning the following kernel configuration parameter (which is often forgotten):

# sysctl net.ipv4.conf.all.rp_filter

If it is set to “1”, the kernel checks the reverse path of every packet before passing it on to the routing stack.

So, if a packet from the A.B.C.D address appears on the eth0 interface while the routing table says that the path to A.B.C.D leads via the eth1 interface (we have a case of asymmetric routing here), such a packet will be dropped if the rp_filter parameter (global or for that interface) is set to 1 (strict mode).
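Because the setting exists both globally and per interface, it helps to see which value actually applies. The kernel uses the higher of conf/all/rp_filter and conf/<interface>/rp_filter, where 0 means no check, 1 means strict mode and 2 means loose mode. The sketch below is a read-only report built on that rule; the helper name `effective_rp_filter` is an illustrative assumption.

```shell
#!/bin/sh
# effective_rp_filter ALL_VALUE IFACE_VALUE  (hypothetical helper)
# The kernel applies the higher of the global and the per-interface value.
effective_rp_filter() {
    if [ "$1" -gt "$2" ]; then echo "$1"; else echo "$2"; fi
}

# Read-only report for every interface on this host (no root needed).
conf=/proc/sys/net/ipv4/conf
if [ -r "$conf/all/rp_filter" ]; then
    all=$(cat "$conf/all/rp_filter")
    for dir in "$conf"/*/; do
        iface=$(basename "$dir")
        [ -r "$dir/rp_filter" ] || continue
        printf '%s: %s\n' "$iface" \
            "$(effective_rp_filter "$all" "$(cat "$dir/rp_filter")")"
    done
fi
```

An interface that reports 1 here will drop asymmetrically routed packets even if its own rp_filter file contains 0.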

BGP Flow Spec

When describing the BGP routing protocol, it is worth saying a few words about BGP Flow Spec. This extension is described in RFC 5575 and is used for packet filtering. Linux (with a little help from the FRR daemon) supports this feature by translating the received NLRI into rules saved in ipset/iptables. Currently, the following filtering features are supported:

- Network source/destination (can be one or the other, or both)

- Layer 4 information for UDP/TCP: source port, destination port, or any port

- Layer 4 information for ICMP type and ICMP code

- Layer 4 information for TCP Flags

- Layer 3 information: DSCP value, Protocol type, packet length, fragmentation

- Misc layer 4 TCP flags

Thanks to this approach it is possible to dynamically configure FW rules on many servers at the same time. More info can be found in the FRR documentation here.

Dropping packets with QOS

It is not commonly known that it is also possible to perform packet filtration at the level of QOS filters. The tc filter command, responsible for classifying the traffic, is used in this case. It allows us to filter traffic on L3 and L4 statelessly. For example, to drop the GRE traffic (protocol 47) coming to the eth0 interface, the following commands can be used:

# tc qdisc add dev eth0 ingress

# tc filter add dev eth0 parent ffff: protocol ip prio 1 u32 match ip protocol 47 0xff action drop

Netem

When talking about packet dropping and QOS, one must mention Netem, a queuing discipline that forms a separate category of QOS. With Netem it is possible to simulate network problems by defining actions like:

- loss

- jitter

- reorder

For example, if we want to emulate 3% losses on the output interface eth0, we can use the following command:

# tc qdisc add dev eth0 root netem loss 3%

By default, netem works on outgoing traffic. However, you can also apply it to incoming traffic with a little help from IFB (Intermediate Functional Block pseudo-device). Please check this web page for a detailed example: How can I use netem on incoming traffic
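The IFB approach can be sketched as follows: incoming traffic on the real interface is redirected to an IFB device, where it becomes outgoing traffic that netem can act on. This is a minimal sketch, assuming the ifb module is available and creates ifb0 when loaded. The `run` and `netem_ingress` helpers are illustrative, and the script only prints the commands unless DRY_RUN=0 is set.

```shell
#!/bin/sh
# Print each command by default; set DRY_RUN=0 and run as root to apply them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# netem_ingress DEV LOSS  (hypothetical helper)
# Applies netem loss to traffic arriving on DEV by redirecting it through ifb0.
netem_ingress() {
    dev=$1
    loss=$2
    run modprobe ifb
    run ip link set dev ifb0 up
    run tc qdisc add dev "$dev" ingress
    # Redirect everything arriving on DEV to ifb0, where it is egress traffic.
    run tc filter add dev "$dev" parent ffff: protocol ip u32 match u32 0 0 \
        flowid 1:1 action mirred egress redirect dev ifb0
    run tc qdisc add dev ifb0 root netem loss "$loss"
}

netem_ingress eth0 3%
```

Running it as is prints the five commands, so they can be reviewed before anything on the host is changed.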

eBPF

In short, eBPF is a specific virtual machine that runs user-created programs attached to specific hooks in the kernel. Such programs are created/compiled at the userspace and injected into the kernel. They can classify and perform actions upon network packets.

Currently, there are four popular ways in which the user can attach eBPF bytecode to the running system in order to parse network traffic:

- Using a QOS action attached with tc filter:

# tc filter add dev eth0 ingress bpf object-file compiled_ebpf.o section simple direct-action

- Using the firewall module: iptables w/ -m bpf --bytecode (which is not of any interest to us, as the BPF would be used only for packet classification)

- Using eXpress Data Path (XDP) - with a little help from the ip link command

- Using the CLI tool named bpfilter (in early stage of development)

The first approach, based on tc filter, is explained well in Jans Erik’s blog. We will concentrate on the XDP approach, as it has the best performance. To drop a UDP packet destined for port 1234, we first need to compile the following BPF code:

#include <linux/bpf.h>
#include <linux/in.h>
#include <linux/if_ether.h>
#include <linux/ip.h>
#include <linux/udp.h>

#define SEC(NAME) __attribute__((section(NAME), used))
#define htons(x) ((__be16)___constant_swab16((x)))

SEC("xdp")
int udp_drop(struct xdp_md *ctx) {
    void *data = (void *)(long)ctx->data;
    void *data_end = (void *)(long)ctx->data_end;

    struct ethhdr *eth = data;
    struct iphdr *ip = data + sizeof(*eth);
    /* assumes an IPv4 header without options (20 bytes) */
    struct udphdr *udph = (void *)ip + sizeof(*ip);

    /* bounds check: all three headers must fit inside the packet */
    if ((void *)(udph + 1) > data_end) {
        return XDP_PASS;
    }
    /* only IPv4 frames have the layout parsed here */
    if (eth->h_proto != htons(ETH_P_IP)) {
        return XDP_PASS;
    }
    if (ip->protocol == IPPROTO_UDP && udph->dest == htons(1234)) {
        return XDP_DROP;
    }
    return XDP_PASS;
}

char _license[] SEC("license") = "GPL";

We compile it with the following command:

$ clang -I/usr/include/x86_64-linux-gnu -O2 -target bpf -c udp_drop.c -o udp_drop.o

Then we can attach the bytecode to the kernel using this CLI (the section name must match the one used in the code):

# ip link set dev eth0 xdp obj udp_drop.o sec xdp

At the end we can stop the XDP program just by unloading it:

# ip link set dev eth0 xdp off

Note: code in this section was based on the Lorenzo Fontana udp-bpf example located here.

DPDK

DPDK is an alternative to eBPF - the idea is to process the packet by bypassing the kernel network stack entirely or partially. In DPDK packets are processed fully in userspace. While this might sound slower than processing in the kernel, it isn’t. Processing a packet in the kernel means passing all the chains and hooks described earlier. We don't always need that. If we just need to do the routing or routing and filtering, we skip QOS, RPF, l2 filter, stateful FW, etc. For example, we can use OVS in DPDK mode and configure only the things that we need - nothing else. In OVS (as soon as we set everything up) we can drop a packet with the following rule:

# ovs-ofctl add-flow br0 "table=0, in_port=eth0,tcp,nw_src=10.100.0.1,tp_dst=80, actions=drop"

From now on, any TCP packet arriving on port eth0 with source IP 10.100.0.1 and destination port 80 (HTTP) will be dropped. Setting up OVS might be tricky, but the performance increase is huge, which makes the effort worthwhile. To gain even more speed, some NICs (especially smartNICs) can offload many OVS tasks and as a result drop packets before they reach the CPU.

An alternative to OVS is VPP - this is even faster in some scenarios so it might be worth taking a look – link here.

L7 Filtering

Kernel modules process packets in layers 2-4 (with a few exceptions like BPF, dedicated kernel modules, or the NFQUEUE action in iptables). In the case of layer 7, it is necessary to use dedicated user space applications. The most popular are SNORT and SQUID. The SNORT IPS analyzes traffic, searching for known exploits, and can work in two modes: TAP and INLINE.

TAP mode:

Fig. 4 TAP mode

In the TAP mode (Snort is only listening to the traffic), a packet can be dropped only by sending a “TCP RST” packet (for TCP session) or “ICMP Admin Prohibited” for the UDP one. However, this approach will not prevent the forbidden packet from being sent, but it should prevent further transmission.

INLINE Mode:

Fig. 5 INLINE mode

For INLINE mode (Snort is transmitting the traffic in question) we have more flexibility and the following actions are possible:

- drop - block and log the packet

- reject - block the packet, log it, and then send a TCP reset if the protocol is TCP or an ICMP port unreachable message if the protocol is UDP

- sdrop - block the packet but do not log it

You can find more about filtering rules in SNORT in its technical documentation here.

SQUID (designed to forward/cache HTTP traffic only), on the other hand, allows web pages to be filtered, e.g. based on the host contained in HTTP headers or using SNI in the case of HTTPS protocol. For example, if an ACL containing domain names like *.yahoo.com *.google.com is defined:

acl access_to_search_engines dstdomain .yahoo.com .google.com

acl access_to_search_engines_ssl ssl::server_name .yahoo.com .google.com

and action DENY is assigned to them:

http_access deny access_to_search_engines

ssl_bump terminate access_to_search_engines_ssl

...then every client using that HTTP proxy service will encounter an error when trying to open the google.com or yahoo.com webpage.
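The leading dot in a dstdomain entry matters: ".google.com" covers the domain itself and every subdomain, while an entry without the dot matches only that exact host name. The sketch below imitates that matching rule so the effect of the ACL can be checked by hand. It is an illustration only, not Squid's implementation, and the function name `dstdomain_match` is an assumption.

```shell
#!/bin/sh
# dstdomain_match HOST PATTERN  (hypothetical helper imitating dstdomain)
# A pattern with a leading dot matches the domain and any subdomain;
# a pattern without it matches only that exact host name.
dstdomain_match() {
    host=$1
    pattern=$2
    case "$pattern" in
        .*) base=${pattern#.}
            case "$host" in
                "$base"|*".$base") return 0 ;;
            esac ;;
        *)  [ "$host" = "$pattern" ] && return 0 ;;
    esac
    return 1
}

# Check a few host names against the two entries from the ACL above.
for h in www.google.com google.com mail.yahoo.com example.com; do
    if dstdomain_match "$h" .google.com || dstdomain_match "$h" .yahoo.com; then
        echo "$h: denied"
    else
        echo "$h: allowed"
    fi
done
```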

Of course, the list of apps that can filter traffic on layer 7 is much longer and contains such items as:

- mod_rewrite in Apache configuration files

- query_string in NGINX vhost config

- ACLs in HAProxy

GPU

Sometimes you might want to filter packets by using a very long ACL (e.g. an ACL built from 10k rules) while dealing with traffic containing a huge number of flows (>100k simultaneous sessions) at the same time. In such cases, OVS or NFT flowtable might not be enough, as neither of them is suited for such scale. In the case of OVS/NFT you will overflow the hashing table, and when you disable the hashing table/stateful FW, the length of the ACL will kill performance. You might consider offloading this task to a smartNIC or DPU, but such devices are rare or expensive.

At Codilime, we tried to take a bit of a different approach to such problems and we decided to use a common Nvidia GPU. While this was a purely R&D project (not yet ready for a production environment) you still might find it helpful. More details along with the presentation can be found here.

Summary

As you can see, there are quite a few methods allowing you to intentionally drop packets in Linux. All of them are built for specific purposes and I hope that you can use them in practice. What is missing here is the performance of these methods, but this is a topic for another blog post.

Original post date 08/08/2019, update date 12/20/22.