One of the most crucial qualities an experienced developer should have is knowing how to avoid reinventing the wheel. When creating a web application, there are a few common functionalities that you need to provide no matter what your application does or what technology you use. Usually you want your application to at least support:

- Secure connection (TLS),

- Authentication,

- High availability,

- Load balancing,

- Circuit breaking,

- Canary deployments,

- Observability,

- Rate limiting.

In this blog post, I will tell you about Envoy proxy - a solution which will not only provide you with the functionalities described above but also with many other neat features. Additionally, Envoy lets you write your own extensions (filters) that act on L4/L7 traffic, so even if some functionality is missing from your perspective, you can extend your own Envoy instance with it rather than modify the internals of your web application.

With Envoy you will be able to focus on the precise business logic your application is supposed to deliver. You do not need to worry about functions related to networking.

What is Envoy?

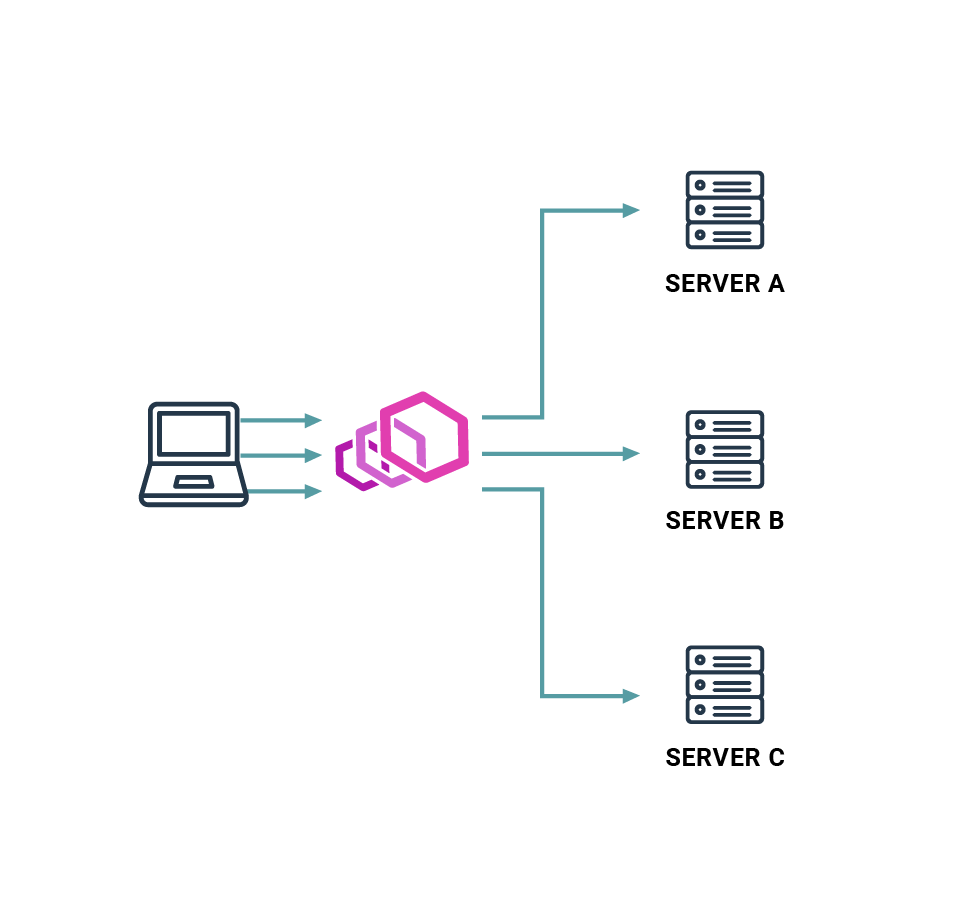

Envoy is a reverse proxy which serves UDP/TCP/HTTP traffic by performing certain operations. To give you a few ideas:

Fig. 1 What is Envoy?

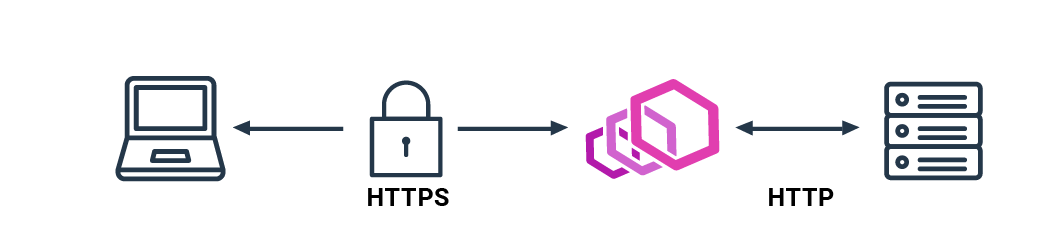

TLS Termination

Let’s imagine your application does not support TLS and there is no easy way to update it. What you can do is download the Envoy binary and provide a simple configuration file pointing to your certificate and private key. The Envoy instance placed in front of your application will then secure the connection: it terminates the client's HTTPS session and forwards the request to your application over a separate plain HTTP connection (this assumes the connection between Envoy and the application runs over a trusted network).
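To make this more concrete, here is a minimal sketch of such a configuration. Envoy reads its bootstrap configuration from YAML or JSON, so the Python helper below builds an Envoy v3 static configuration and prints it as JSON. The helper name `tls_termination_config`, the certificate paths, the host and the ports are hypothetical placeholders. The listener's `DownstreamTlsContext` is where TLS is terminated; the upstream cluster has no transport socket, so Envoy talks plain HTTP to the application.

```python
import json

# Type URLs of the Envoy v3 extensions used below.
HCM = "type.googleapis.com/envoy.extensions.filters.network.http_connection_manager.v3.HttpConnectionManager"
ROUTER = "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"
TLS = "type.googleapis.com/envoy.extensions.transport_sockets.tls.v3.DownstreamTlsContext"


def tls_termination_config(cert_path, key_path, app_host, app_port, listen_port=8443):
    """Hypothetical helper: accept HTTPS on listen_port, forward plain HTTP to app_host:app_port."""
    http_connection_manager = {
        "name": "envoy.filters.network.http_connection_manager",
        "typed_config": {
            "@type": HCM,
            "stat_prefix": "ingress_https",
            # Send every request, whatever its host or path, to the "app" cluster.
            "route_config": {
                "virtual_hosts": [{
                    "name": "app",
                    "domains": ["*"],
                    "routes": [{"match": {"prefix": "/"}, "route": {"cluster": "app"}}],
                }],
            },
            "http_filters": [{"name": "envoy.filters.http.router", "typed_config": {"@type": ROUTER}}],
        },
    }
    # Downstream TLS context: this is where the client's TLS session is terminated.
    tls_socket = {
        "name": "envoy.transport_sockets.tls",
        "typed_config": {
            "@type": TLS,
            "common_tls_context": {
                "tls_certificates": [{
                    "certificate_chain": {"filename": cert_path},
                    "private_key": {"filename": key_path},
                }],
            },
        },
    }
    app_endpoint = {"endpoint": {"address": {"socket_address": {"address": app_host, "port_value": app_port}}}}
    return {
        "static_resources": {
            "listeners": [{
                "name": "https_listener",
                "address": {"socket_address": {"address": "0.0.0.0", "port_value": listen_port}},
                "filter_chains": [{"filters": [http_connection_manager], "transport_socket": tls_socket}],
            }],
            # No transport_socket on the cluster, so the upstream connection is plain HTTP.
            "clusters": [{
                "name": "app",
                "type": "STRICT_DNS",
                "load_assignment": {"cluster_name": "app", "endpoints": [{"lb_endpoints": [app_endpoint]}]},
            }],
        }
    }


if __name__ == "__main__":
    cfg = tls_termination_config("/etc/envoy/cert.pem", "/etc/envoy/key.pem", "127.0.0.1", 8080)
    print(json.dumps(cfg, indent=2))
```

Saving the printed JSON to a file such as `envoy.json` and starting Envoy with `envoy -c envoy.json` should be enough for a first experiment; it is worth checking the field names against the documentation of the Envoy version you actually run.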

Fig. 2 Envoy proxy TLS termination

Load Balancing

Envoy groups upstream servers that can handle the same requests into a layer of abstraction called a cluster.

Fig. 3 Envoy proxy load balancing

With that abstraction, you can do more than just list the servers. You can also choose the load-balancing algorithm used to pick the next machine (for example round robin or least request), and assign weights to individual targets so that more powerful machines receive a bigger share of the load. Additionally, you can define health check rules that detect when a machine is down, so that no requests are sent to it.
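A cluster with weighted endpoints and active health checks could look roughly like the sketch below, assuming the Envoy v3 API. The helper name `weighted_cluster`, the host names and the `/healthz` path are hypothetical. When endpoints carry a `load_balancing_weight`, Envoy's `ROUND_ROBIN` policy performs weighted round robin, so with weights 3 and 1 `big-server` should receive about three times as many requests as `small-server` while both are healthy.

```python
import json


def weighted_cluster(name, backends, health_path="/healthz"):
    """Hypothetical helper; backends is a list of (host, port, weight) tuples."""
    lb_endpoints = [
        {
            "endpoint": {"address": {"socket_address": {"address": host, "port_value": port}}},
            # Relative weight: a higher value sends proportionally more requests here.
            "load_balancing_weight": weight,
        }
        for host, port, weight in backends
    ]
    return {
        "name": name,
        "type": "STRICT_DNS",
        # Load-balancing algorithm; with endpoint weights set, this becomes weighted round robin.
        "lb_policy": "ROUND_ROBIN",
        "load_assignment": {"cluster_name": name, "endpoints": [{"lb_endpoints": lb_endpoints}]},
        # Active health checking: an endpoint is marked unhealthy after 3 failed checks
        # and only receives traffic again after 2 successful ones.
        "health_checks": [{
            "timeout": "1s",
            "interval": "5s",
            "unhealthy_threshold": 3,
            "healthy_threshold": 2,
            "http_health_check": {"path": health_path},
        }],
    }


if __name__ == "__main__":
    cluster = weighted_cluster("backend", [("big-server", 8080, 3), ("small-server", 8080, 1)])
    print(json.dumps(cluster, indent=2))
```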

This way, at the cost of a few additional configuration lines, you can achieve high availability (HA). Another powerful feature is the so-called “canary deployment”: you roll out an update (of your application or its configuration) to a subset of servers only and send part of the traffic to them. This way you can test the change while limiting the risk of breaking the entire application (even if the updated servers fail, the servers that were not updated keep working).
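On the traffic side, one way to express a canary split in Envoy is a route with weighted clusters: most requests go to the stable cluster and a small percentage to the canary. This is a minimal sketch; the helper `canary_route` and the cluster names are hypothetical, and both clusters are assumed to be defined elsewhere in the configuration.

```python
import json


def canary_route(stable_cluster, canary_cluster, canary_percent):
    """Hypothetical helper: send canary_percent % of requests to canary_cluster."""
    if not isinstance(canary_percent, int) or not 0 < canary_percent < 100:
        raise ValueError("canary_percent must be an integer between 1 and 99")
    return {
        "match": {"prefix": "/"},
        "route": {
            # Envoy picks a cluster for each request in proportion to these weights.
            "weighted_clusters": {
                "clusters": [
                    {"name": stable_cluster, "weight": 100 - canary_percent},
                    {"name": canary_cluster, "weight": canary_percent},
                ]
            }
        },
    }


if __name__ == "__main__":
    print(json.dumps(canary_route("app_stable", "app_canary", 10), indent=2))
```

Raising `canary_percent` step by step, and applying the updated route each time, gradually shifts traffic to the new version; if the canary misbehaves, lowering it sends the traffic back.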

Speaking of HA setups, one cool thing about Envoy is that you can change its configuration while an instance is running without interrupting anyone’s traffic. Envoy applies the new configuration, but keeps existing connections and handles them according to the previous Envoy configuration.

Fig. 4 HA setup with Envoy proxy

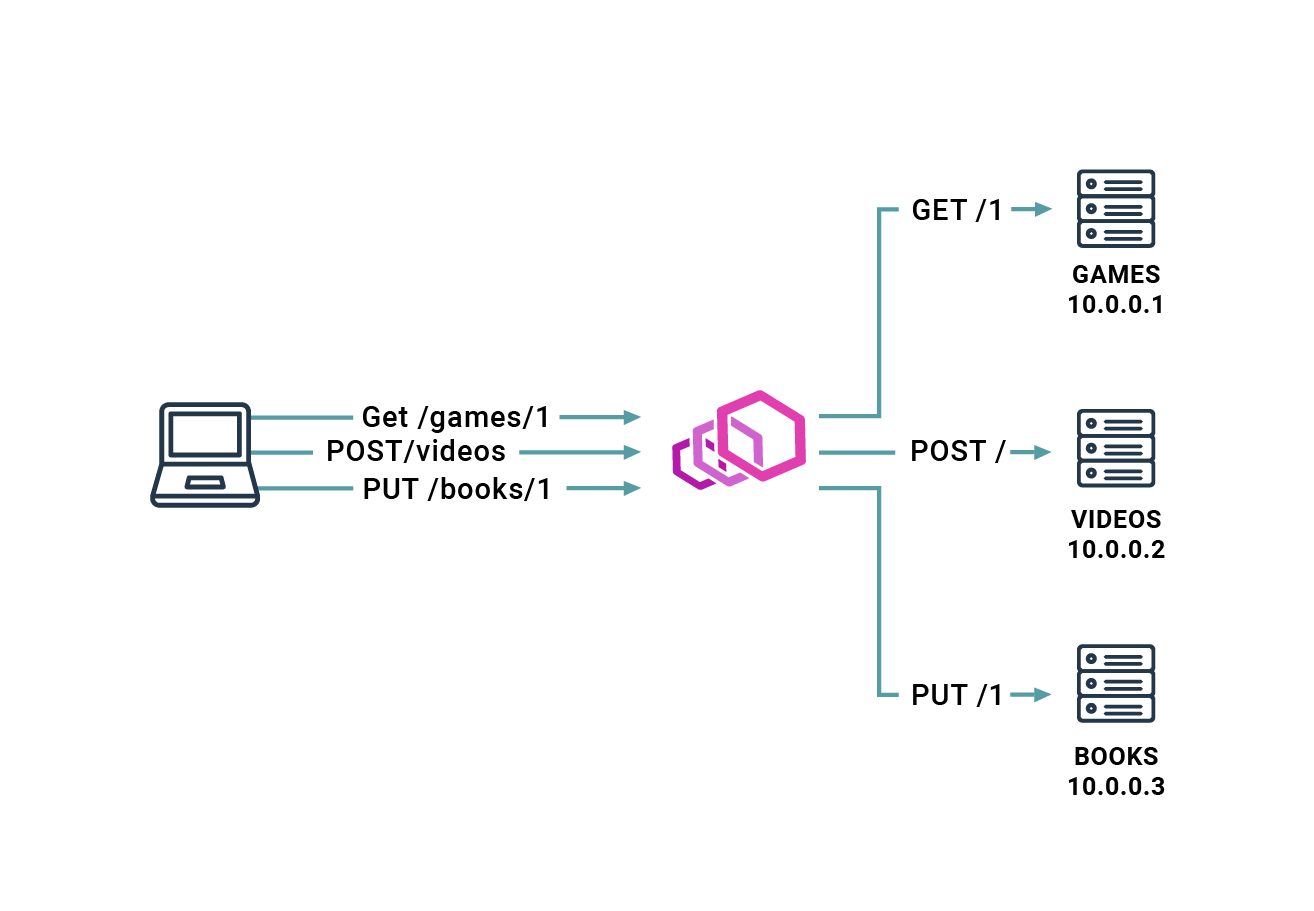

Envoy can also help as your application grows and gains additional backend servers. You can easily choose which target should serve a given request based on the requested endpoint (URL path), and you can even rewrite that path into the form the target expects. This makes Envoy great middleware for your application.
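Path-based routing with rewriting can be expressed as an Envoy route configuration. The sketch below uses `prefix_rewrite`, which replaces the matched prefix before the request is forwarded; the helper `path_routes`, the cluster names and the paths are hypothetical. Because Envoy uses the first route that matches, the helper puts more specific (longer) prefixes first.

```python
import json


def path_routes(rules):
    """Hypothetical helper; rules is a list of (prefix, cluster, rewrite_or_None) tuples."""
    # Envoy uses the first matching route, so put longer (more specific) prefixes first.
    ordered = sorted(rules, key=lambda rule: len(rule[0]), reverse=True)
    routes = []
    for prefix, cluster, rewrite in ordered:
        action = {"cluster": cluster}
        if rewrite is not None:
            # Replace the matched prefix with `rewrite` before forwarding upstream.
            action["prefix_rewrite"] = rewrite
        routes.append({"match": {"prefix": prefix}, "route": action})
    return {"name": "local_route", "virtual_hosts": [{"name": "backend", "domains": ["*"], "routes": routes}]}


if __name__ == "__main__":
    config = path_routes([
        ("/", "frontend", None),
        ("/api/users/", "users_service", "/v1/users/"),
    ])
    print(json.dumps(config, indent=2))
```

With these rules, a request for `/api/users/42` is forwarded to `users_service` as `/v1/users/42`, while everything else goes to `frontend` unchanged. Keeping trailing slashes consistent in both the prefix and the rewrite avoids producing paths like `//42`.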

A few other neat features I could mention are:

- Dropping traffic (based on parameters such as source IP address or destination port).

- Compressing responses when an “accept-encoding” header is detected.

- Collecting traffic statistics (Envoy gives you access to plenty of predefined stats).

- Collecting headers sent by clients.
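As an example of how such features are switched on, response compression is an HTTP filter placed before the router in the HTTP connection manager's filter chain. The sketch below assumes the Envoy v3 compressor filter with the gzip library and its default settings; the helper name is hypothetical. With those defaults, responses are only compressed when the client's `Accept-Encoding` allows gzip and the response passes the filter's size and content-type checks.

```python
import json

COMPRESSOR = "type.googleapis.com/envoy.extensions.filters.http.compressor.v3.Compressor"
GZIP = "type.googleapis.com/envoy.extensions.compression.gzip.compressor.v3.Gzip"
ROUTER = "type.googleapis.com/envoy.extensions.filters.http.router.v3.Router"


def http_filters_with_gzip():
    """Hypothetical helper returning the http_filters list of an HTTP connection manager."""
    return [
        {
            # Compresses responses when the client's Accept-Encoding allows gzip
            # (subject to the filter's default size and content-type rules).
            "name": "envoy.filters.http.compressor",
            "typed_config": {
                "@type": COMPRESSOR,
                "compressor_library": {"name": "gzip", "typed_config": {"@type": GZIP}},
            },
        },
        # The router performs the actual forwarding and must be the last HTTP filter.
        {"name": "envoy.filters.http.router", "typed_config": {"@type": ROUTER}},
    ]


if __name__ == "__main__":
    print(json.dumps(http_filters_with_gzip(), indent=2))
```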

NGINX/HAProxy with extra steps

You may already have worked with reverse proxies such as NGINX or HAProxy. Even then, Envoy is worth a closer look because of:

- Numerous statistics (such as the number of requests), available through the administration endpoint.

- Discovery services, which allow you to configure your Envoy instances dynamically.

- Custom filters written in C++, Lua or WebAssembly (Wasm), which make functionality easy to extend.
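The statistics are served by Envoy's admin interface, which is enabled through the `admin` section of the bootstrap configuration. The sketch below assumes the admin listener is bound to `127.0.0.1:9901` (a port commonly used in Envoy's examples; keeping it on localhost is sensible because the admin interface also exposes operational controls) and parses the plain-text output of `GET /stats`, where counters and gauges appear as `name: value` lines. The helper names are hypothetical, and histogram summary lines are simply skipped.

```python
import urllib.request

# Bootstrap fragment enabling the admin interface on localhost only (assumed port 9901).
ADMIN = {"address": {"socket_address": {"address": "127.0.0.1", "port_value": 9901}}}


def parse_stats(text):
    """Parse `name: value` lines from the admin /stats output into a dict of integers."""
    stats = {}
    for line in text.splitlines():
        name, sep, value = line.partition(": ")
        if not sep:
            continue
        try:
            stats[name.strip()] = int(value)
        except ValueError:
            continue  # histogram summaries and other non-integer values
    return stats


def fetch_stats(host="127.0.0.1", port=9901):
    """Fetch and parse counters and gauges from a running Envoy's admin endpoint."""
    with urllib.request.urlopen(f"http://{host}:{port}/stats") as resp:
        return parse_stats(resp.read().decode("utf-8"))


if __name__ == "__main__":
    for name, value in sorted(fetch_stats().items()):
        print(name, value)
```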

Envoy is a relatively young project, but its community is growing quickly. Its creator, Matt Klein, has written numerous great articles describing how Envoy can save you work, how to use it and what the advantages of its design choices are. To name a few:

- Hot restarts,

- Threading model,

- Service mesh data plane vs control plane,

- Introduction to modern network load-balancing and proxying.

Some companies have already switched their systems from NGINX/HAProxy to Envoy and reported better performance, easier configuration and fewer issues overall. For example, take a look at the article written by Dropbox about its migration.

Envoy and Kubernetes

Up to now, I’ve been talking about using Envoy as a proxy between the client and the server. However, containerized applications (including those based on microservices architecture) have become a standard in application development, so let’s take a look at them as well.

When talking about deployments in Kubernetes, which is probably the most popular container orchestration platform today, you might have heard about two solutions used in the area of application networking:

- Kubernetes Ingress,

- Service mesh.

Envoy can be successfully utilized within both of them.


Ingress with Envoy

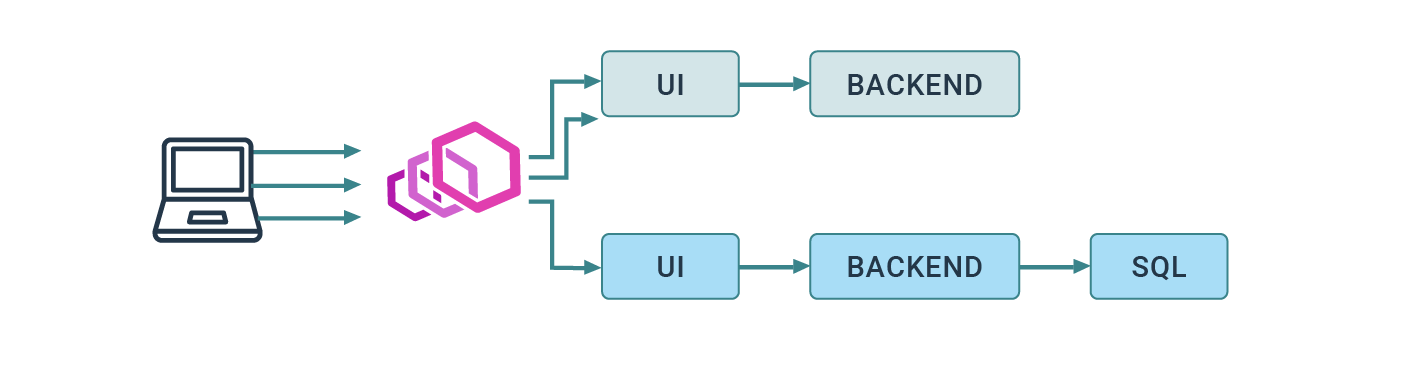

Fig. 5 Ingress with Envoy

The idea of Kubernetes Ingress is to manage (at L7) the traffic coming from outside the cluster to resources deployed inside it. The purpose is to reach internal Kubernetes Services through a single entry point, which is responsible for HTTP routing.
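From the user's perspective, an Envoy-based Ingress controller is typically driven by Kubernetes resources rather than raw Envoy configuration. As a sketch, here is a standard `networking.k8s.io/v1` Ingress built as a Python dict and printed as JSON (which `kubectl apply -f` accepts). The helper, host, Service names and the `ingressClassName` value are hypothetical; the class name depends on the controller you install.

```python
import json


def ingress(name, host, class_name, path_to_service):
    """Hypothetical helper; path_to_service maps a URL prefix to a (service_name, port) pair."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": svc, "port": {"number": port}}},
        }
        for path, (svc, port) in path_to_service.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name},
        "spec": {
            # Selects which Ingress controller handles this resource (hypothetical class name).
            "ingressClassName": class_name,
            "rules": [{"host": host, "http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    manifest = ingress(
        "shop", "shop.example.com", "example-envoy-ingress",
        {"/": ("frontend", 80), "/api": ("api", 8080)},
    )
    print(json.dumps(manifest, indent=2))
```

The controller watches such resources and translates them into Envoy listeners, routes and clusters behind the scenes.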

The example implementations offering Ingress functionality for Kubernetes while using Envoy underneath are:

All of these provide their own configurations which wrap the original Envoy configuration.

Service mesh with Envoy

Fig. 6 Service mesh with Envoy

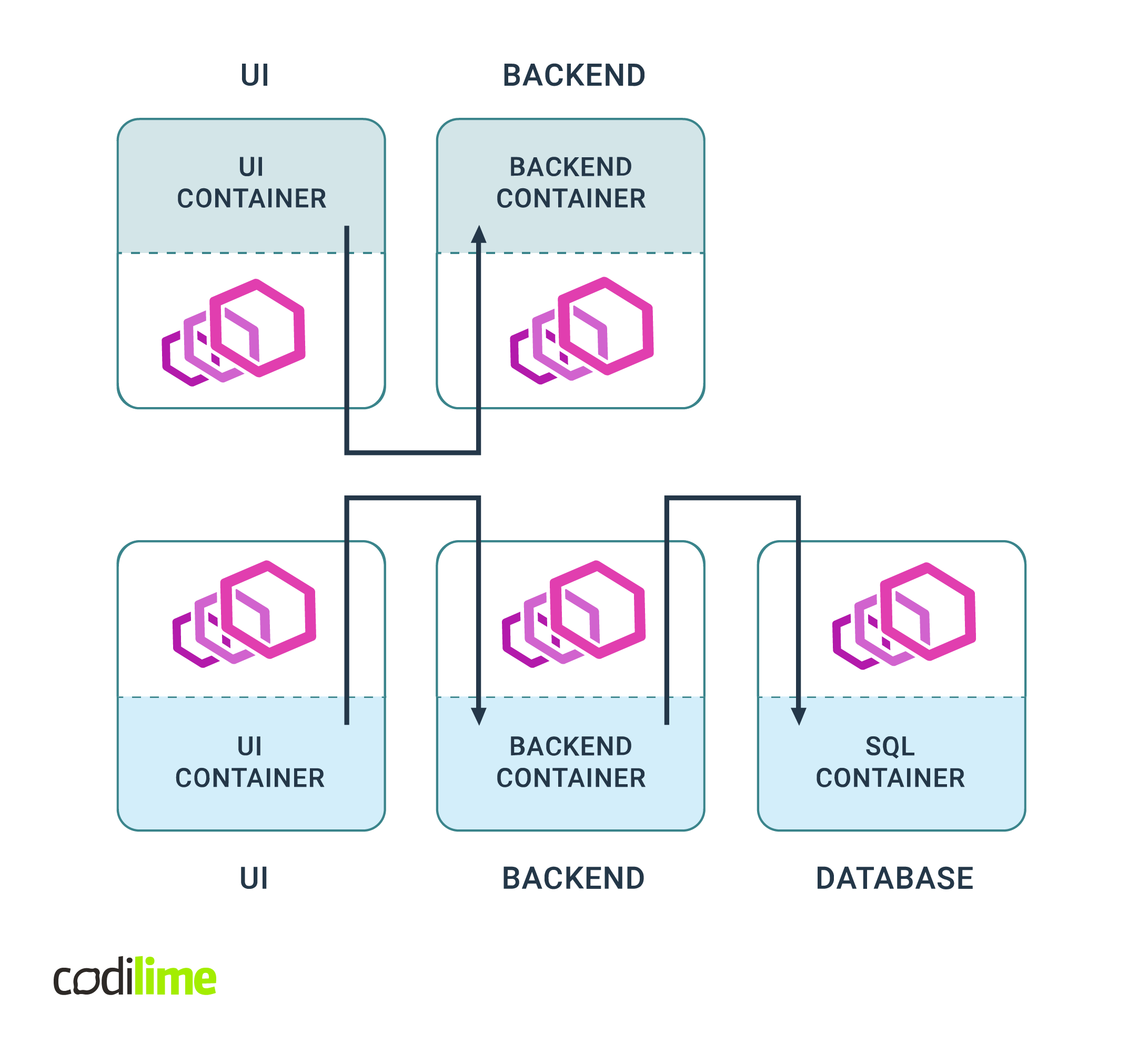

In this approach, you place an Envoy instance within each Pod in the cluster. With Envoy running as a sidecar, you can configure it so that all communication between workloads running in the cluster goes through Envoy instances acting as proxies.

This approach gives you much more insight into microservice interactions, as you can easily track requests sent between components. This greatly improves observability, which Ingress in its native form does not provide, because it only deals with north-south traffic.

Service mesh does come at a price, though, as every Pod in the cluster needs to be “enhanced” with an Envoy instance. Not only does this increase the usage of computing resources and add latency, but the configuration also has to be distributed efficiently across all of the Envoy instances. Instead of doing that yourself, you can rely on the service mesh control plane, which is the brain of the system that configures and coordinates the behavior of the data plane proxies.
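The control plane usually also takes care of adding the sidecars. In Istio, for example, automatic injection is commonly enabled by labelling a namespace with `istio-injection=enabled`, after which Pods created in that namespace get an Envoy sidecar added. A minimal sketch producing such a Namespace manifest as JSON (the helper and namespace name are hypothetical; other meshes use their own mechanisms):

```python
import json


def mesh_namespace(name):
    """Hypothetical helper: a Namespace whose new Pods get Istio's Envoy sidecar injected."""
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Istio's sidecar injector acts on Pods created in namespaces with this label.
            "labels": {"istio-injection": "enabled"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(mesh_namespace("shop"), indent=2))
```

Pods that already existed before the label was added keep running without a sidecar until they are recreated.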

Example service mesh implementations leveraging Envoy are:

Choose wisely

Choosing the right setup depends on your actual needs. Let’s compare the three options: plain Envoy, Ingress and service mesh.

- Envoy can be put close to a particular component, where it will provide missing logic, e.g. TLS, load balancing, etc.

- The Ingress configuration helps you manage traffic coming from outside the cluster; usually this means users connecting to your application (north-south traffic).

- A service mesh configuration lets you analyze what is happening with your application under the hood: you can track communication between application components, gather various types of logs, and control such (east-west) traffic in a sophisticated way.

Before using solutions such as Contour or Istio, it is strongly recommended that you gain at least some understanding of Envoy. Both of them use Envoy underneath, so the more you know about Envoy, the more consciously you can use the Ingress controllers and service meshes that are based on it.

Conclusions

Envoy solves many of the problems that most web applications face. Rather than implementing the required features on your own, it is better to use Envoy after checking which exact configuration fits your needs. Of course, this requires some experience with Envoy. Additionally, for applications based on microservices architecture, you will usually want a service mesh solution such as Istio rather than “plain” Envoy.

If you’re already convinced and want to take a deeper interest in Envoy, the next blog post in this series might be interesting for you as well. There you will find more information from the developer perspective, including how to configure Envoy and how to add your own logic to it.